Nextcloud on Elastic File System and Elastic Container Service

02 August 2023

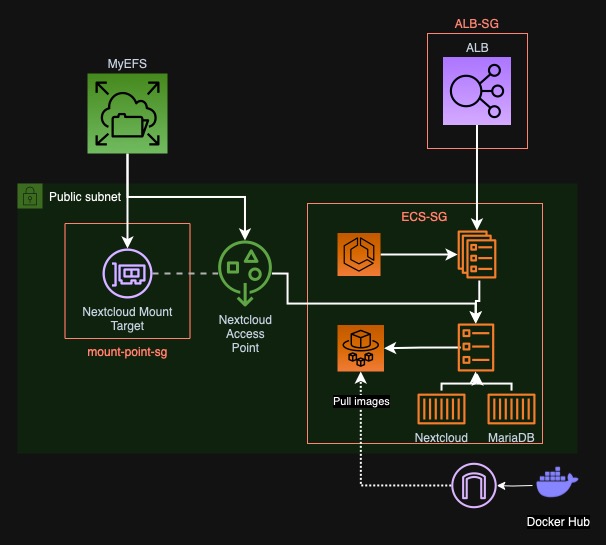

When preparing for the AWS SysOps Associate certification, I wondered how I could learn more about some of the components I had never used. I decided to try Elastic File System, a managed NFS service that grows elastically with the data you store. We recently ran out of space on our Nextcloud instance's EBS volume, so it was a perfect opportunity to test the planned future setup: Nextcloud data kept on an NFS volume. To challenge myself even more, I wanted to use Elastic Container Service to start a Nextcloud+Apache container for testing, with an ephemeral MariaDB sidecar. Today, we will create the following system:

Our entrypoint will be an ALB which will forward traffic to an ECS service with Apache serving us Nextcloud. The ECS task will have an EFS mount to keep the files. I will skip the code for VPC and subnets, as it is not the focus of this post and is trivial to create. Follow the repository commits for the full code.

Repository for this post here.

Creating EFS

We will start by defining an IAM role for the ECS task, so that we can specify policies for EFS. The role will also have AmazonECSTaskExecutionRolePolicy attached, so that later we can upload the logs to CloudWatch.

resource "aws_iam_role" "Task-Role" {
  name = "Task-Role"
  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect = "Allow"
      Principal = { Service = "ecs-tasks.amazonaws.com" }
      Action = "sts:AssumeRole"
    }]
  })
}

resource "aws_iam_role_policy_attachment" "Task-Role-Attachment" {
  role = aws_iam_role.Task-Role.name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AmazonECSTaskExecutionRolePolicy"
}

The most basic EFS resource is aws_efs_file_system. It creates the actual place where data is stored. It is very simple to define and doesn't have too many parameters. We will use the default values, but you can also specify throughput mode and performance mode at a higher cost.

# Find default encryption key
data "aws_kms_key" "aws-efs" {
  key_id = "alias/aws/elasticfilesystem"
}

resource "aws_efs_file_system" "MyEFS" {
  creation_token = "MyEFS"
  encrypted = true
  kms_key_id = data.aws_kms_key.aws-efs.arn
  tags = { Name = "MyEFS" }
}
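
As a rough sketch of the non-default options mentioned above, the same kind of resource could set `performance_mode`, `throughput_mode` and `provisioned_throughput_in_mibps`. The resource name `MyEFS-Provisioned`, its creation token and the 10 MiB/s figure are placeholders of mine, not values from this post's repository.

```hcl
# Hypothetical variant of the file system with explicit modes.
resource "aws_efs_file_system" "MyEFS-Provisioned" {
  creation_token = "MyEFS-Provisioned"
  encrypted = true
  kms_key_id = data.aws_kms_key.aws-efs.arn

  performance_mode = "generalPurpose" # or "maxIO"
  throughput_mode = "provisioned" # the default is "bursting"
  provisioned_throughput_in_mibps = 10 # only valid with "provisioned"

  tags = { Name = "MyEFS-Provisioned" }
}
```

Provisioned throughput is billed on top of storage, which is why the post sticks to the defaults.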

But to actually use the filesystem we need at least one mount point (AWS calls it a mount target). It creates a network interface in a specified subnet that allows instances to mount the root of the filesystem, if IAM or resource policies allow it. However, this setup is not ideal: we would need to manage multiple filesystems and policies if we wanted to keep applications from seeing each other's data. AWS also offers Access Points. Access Points are similar to mount points (at least one mount point is required for them) but use the AWS driver to connect. They can also present a directory within EFS as the root of the filesystem, which allows more granular control over which instance can access which part of the filesystem, all within a single EFS instance. The mount point will also need a security group. For now we will use one with no ingress rules, but later we will allow traffic from ECS.

resource "aws_efs_mount_target" "Nextcloud-Mount-Target" {
  file_system_id = aws_efs_file_system.MyEFS.id
  subnet_id = aws_subnet.public[0].id # first public subnet
  security_groups = [aws_security_group.mount-point-sg.id]
}

resource "aws_efs_access_point" "Nextcloud-Access-Point" {
  file_system_id = aws_efs_file_system.MyEFS.id

  root_directory {
    path = "/nextcloud" # name of the root invisible to the application
    creation_info {
      owner_gid = 33 # user and group owning `/nextcloud` (www-data)
      owner_uid = 33
      permissions = "777" # permissions of the root directory (just for testing)
    }
  }

  posix_user {
    gid = 33 # enforce www-data ownership of all the files
    uid = 33
  }

  tags = { Name = "Nextcloud-Access-Point" }
}

resource "aws_security_group" "mount-point-sg" {
  vpc_id = aws_vpc.My-VPC.id
  name = "mount-point-sg"

  egress {
    from_port = 0
    to_port = 0
    protocol = "-1"
    cidr_blocks = ["0.0.0.0/0"]
    ipv6_cidr_blocks = ["::/0"]
  }
}
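
If the tasks were ever spread over several subnets, one mount target per subnet would be needed. The sketch below is an alternative to the single mount target above, not an addition to it (the first subnet would otherwise get two). It assumes `aws_subnet.public` is created with `count` and that each subnet lives in a different Availability Zone; the resource name `Nextcloud-Mount-Targets` is hypothetical.

```hcl
# Hypothetical replacement for the single mount target: one per public subnet.
# EFS allows only one mount target per Availability Zone.
resource "aws_efs_mount_target" "Nextcloud-Mount-Targets" {
  count = length(aws_subnet.public)

  file_system_id = aws_efs_file_system.MyEFS.id
  subnet_id = aws_subnet.public[count.index].id
  security_groups = [aws_security_group.mount-point-sg.id]
}
```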

Next comes aws_efs_file_system_policy, a resource policy that controls who can use our new filesystem. We will allow the Task-Role role to use the filesystem, but only through the access point specified in the condition.

resource "aws_efs_file_system_policy" "EFS-Policy" {
  file_system_id = aws_efs_file_system.MyEFS.id
  policy = jsonencode({
    Version = "2012-10-17"
    Id = "EFSPolicy"
    Statement = [{
      Sid = "Allow ECS access to EFS"
      Effect = "Allow"
      Principal = { AWS = [aws_iam_role.Task-Role.arn] }
      Action = ["elasticfilesystem:ClientMount", "elasticfilesystem:ClientWrite"]
      Resource = aws_efs_file_system.MyEFS.arn
      Condition = {
        # Only this access point
        StringEquals = { "elasticfilesystem:AccessPointArn" = aws_efs_access_point.Nextcloud-Access-Point.arn }
      }
    }]
  })
}
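
The same policy could also refuse unencrypted mounts. The following is only a sketch of such an extra statement, based on the `aws:SecureTransport` condition key; the local name `EFS_DENY_PLAINTEXT` is made up for illustration and is not part of the post's repository.

```hcl
locals {
  # Hypothetical extra statement that denies any client not using TLS.
  EFS_DENY_PLAINTEXT = {
    Sid = "DenyUnencryptedTransport"
    Effect = "Deny"
    Principal = { AWS = "*" }
    Action = "*"
    Resource = aws_efs_file_system.MyEFS.arn
    Condition = {
      Bool = { "aws:SecureTransport" = "false" }
    }
  }
}
```

To use it, the statement would be appended to the `Statement` list of the policy above, for example as `Statement = [{ ... }, local.EFS_DENY_PLAINTEXT]`.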

Creating the Application Load Balancer

Before we even start the containers, it would be nice to have a way to check whether they are outputting anything besides the logs. We will create an ALB with an HTTPS listener. If you don't have a domain to get an ACM certificate for, you can use HTTP instead. The load balancer will run in all public subnets. I will use my HTTP listener to redirect to HTTPS. We will also use a placeholder response for HTTPS until we create a target group.

resource "aws_alb" "ALB" {
  subnets = aws_subnet.public[*].id
  security_groups = [aws_security_group.ALB-SG.id]
}

data "aws_acm_certificate" "my-domain" {
  domain = var.Domain
  statuses = ["ISSUED"]
}

resource "aws_alb_listener" "HTTPS" {
  load_balancer_arn = aws_alb.ALB.arn
  port = 443
  protocol = "HTTPS"
  ssl_policy = "ELBSecurityPolicy-2016-08"
  certificate_arn = data.aws_acm_certificate.my-domain.arn

  default_action {
    type = "fixed-response"
    fixed_response {
      content_type = "text/plain"
      message_body = "Hello world!"
      status_code = "200"
    }
  }
}

resource "aws_alb_listener" "HTTP" {
  load_balancer_arn = aws_alb.ALB.arn
  port = 80
  protocol = "HTTP"

  # Redirect to HTTPS
  default_action {
    type = "redirect"
    redirect {
      port = "443"
      protocol = "HTTPS"
      status_code = "HTTP_301"
    }
  }
}
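
For readers without a domain, a minimal HTTP-only sketch could look like the block below. It would be used instead of the two listeners above (a load balancer accepts only one listener per port), and the ACM data source would then not be needed. The resource name `HTTP-Only` is hypothetical.

```hcl
# Hypothetical HTTP-only listener, replacing both the HTTPS listener
# and the redirecting HTTP listener.
resource "aws_alb_listener" "HTTP-Only" {
  load_balancer_arn = aws_alb.ALB.arn
  port = 80
  protocol = "HTTP"

  # Same placeholder response as the HTTPS listener uses
  default_action {
    type = "fixed-response"
    fixed_response {
      content_type = "text/plain"
      message_body = "Hello world!"
      status_code = "200"
    }
  }
}
```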

Of course, the ALB requires a security group. Create one that opens ports 80 and 443 to everyone (or just to your IP if you want to be more secure).

resource "aws_security_group" "ALB-SG" {
  name = "ALB-SG"
  vpc_id = aws_vpc.My-VPC.id

  ingress {
    from_port = 80
    to_port = 80
    protocol = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
    ipv6_cidr_blocks = ["::/0"]
  }

  ... # Repeat same ingress for 443

  egress {
    ... # Egress to everywhere
  }
}
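
One way to avoid repeating the ingress block is a `dynamic` block. This is a sketch of an equivalent definition rather than the code from the repository; the local `ALB_SG_PORTS` is a hypothetical helper, and the egress rule is copied from the mount point security group shown earlier.

```hcl
locals {
  ALB_SG_PORTS = [80, 443] # hypothetical helper local
}

resource "aws_security_group" "ALB-SG" {
  name = "ALB-SG"
  vpc_id = aws_vpc.My-VPC.id

  # One ingress rule is generated per port in the list
  dynamic "ingress" {
    for_each = local.ALB_SG_PORTS
    content {
      from_port = ingress.value
      to_port = ingress.value
      protocol = "tcp"
      cidr_blocks = ["0.0.0.0/0"]
      ipv6_cidr_blocks = ["::/0"]
    }
  }

  # Egress to everywhere
  egress {
    from_port = 0
    to_port = 0
    protocol = "-1"
    cidr_blocks = ["0.0.0.0/0"]
    ipv6_cidr_blocks = ["::/0"]
  }
}
```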

Running Nextcloud on ECS

Let's start by defining environment variables for the Nextcloud and MariaDB containers. The AWS way of supplying variables is not the most convenient, so we will convert our standard maps to lists of name and value objects for AWS.

locals {
  HTTP_PORT = 80
  DB_HOST = "nextcloud-db"
  DB_DATABASE = "nextcloud"
  DB_USER = "nextcloud"
  DB_PASSWORD = "nextcloud"

  MARIADB_ENV_VARIABLES = {
    MARIADB_ROOT_PASSWORD = "234QWE.rtysdfg"
    MARIADB_DATABASE = local.DB_DATABASE
    MARIADB_USER = local.DB_USER
    MARIADB_PASSWORD = local.DB_PASSWORD
  }

  NEXTCLOUD_ENV_VARIABLES = {
    MYSQL_HOST = "127.0.0.1" # The same task uses local loopback
    MYSQL_DATABASE = local.DB_DATABASE
    MYSQL_USER = local.DB_USER
    MYSQL_PASSWORD = local.DB_PASSWORD
    NEXTCLOUD_ADMIN_USER = "admin"
    NEXTCLOUD_ADMIN_PASSWORD = "admin"
    NEXTCLOUD_DATA_DIR = "/nextcloud"
  }

  # Normalize to AWS format
  NEXTCLOUD_ENV_VARIABLES_AWS = [
    for key, value in local.NEXTCLOUD_ENV_VARIABLES : {
      name = key
      value = value
    }
  ]

  MARIADB_ENV_VARIABLES_AWS = [
    for key, value in local.MARIADB_ENV_VARIABLES : {
      name = key
      value = value
    }
  ]
}

In the above example, some of the values are defined outside the maps so that we don't need to repeat them when they change. The _AWS lists are normalized for the ECS task definition.
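
To see what the normalization produces, a throwaway output can be added while testing. The output name `mariadb_env_preview` is hypothetical, and since it prints the passwords it should not stay in the configuration.

```hcl
# Hypothetical output, only for inspecting the normalized list.
output "mariadb_env_preview" {
  value = local.MARIADB_ENV_VARIABLES_AWS
}

# Shape of the value (Terraform iterates the keys in lexical order):
# [
#   { name = "MARIADB_DATABASE", value = "nextcloud" },
#   { name = "MARIADB_PASSWORD", value = "nextcloud" },
#   { name = "MARIADB_ROOT_PASSWORD", value = "234QWE.rtysdfg" },
#   { name = "MARIADB_USER", value = "nextcloud" },
# ]
```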

Now let's write our security group. It will allow traffic from ALB on port 80 and self traffic on port 3306 - between the Nextcloud and MariaDB containers.

resource "aws_security_group" "ECS-SG" {
  name = "ECS-SG"
  vpc_id = aws_vpc.My-VPC.id

  ingress {
    from_port = local.HTTP_PORT
    to_port = local.HTTP_PORT
    protocol = "tcp"
    security_groups = [aws_security_group.ALB-SG.id]
  }

  ingress {
    from_port = 3306
    to_port = 3306
    protocol = "tcp"
    self = true
  }

  egress {
    ... # Egress to everywhere
  }
}

To be able to even connect to the ECS instances from ALB, we need to define a target group that ECS service will update on container start or stop.

resource "aws_alb_target_group" "ECS-TG" {
  name = "ECS-Nextcloud"
  port = local.HTTP_PORT
  protocol = "HTTP"
  vpc_id = aws_vpc.My-VPC.id
  target_type = "ip" # For Fargate ECS, it just uses IP type

  health_check {
    path = "/"
    port = "traffic-port"
    protocol = "HTTP"
    matcher = "200-499" # Client side errors are fine
  }
}

Next comes a long part where we have to define the containers. We will start with the basic parameters like roles, CPU, memory, etc.

resource "aws_ecs_task_definition" "Nextcloud-Task" {
  cpu = 1024
  memory = 2048
  network_mode = "awsvpc"
  requires_compatibilities = ["FARGATE"]
  execution_role_arn = aws_iam_role.Task-Role.arn
  task_role_arn = aws_iam_role.Task-Role.arn
  family = "Nextcloud-Maria-Task"

  volume {
    ... # Here we will define EFS volume
  }

  container_definitions = jsonencode([ ... ]) # Here we will define containers
}

Container definitions are written in JSON. There's a helper in Terraform to write them as a data source, but it doesn't offer any suggestions, as the actual definition is also written as a JSON string. So, let's start with the volume. This will be the contents of our volume block. We will enforce encryption in transit for EFS and use the access point with IAM authentication to create the volume.

volume {
  name = "nextcloud-storage"
  efs_volume_configuration {
    file_system_id = aws_efs_file_system.MyEFS.id
    transit_encryption = "ENABLED"
    transit_encryption_port = 2049
    authorization_config {
      access_point_id = aws_efs_access_point.Nextcloud-Access-Point.id
      iam = "ENABLED"
    }
  }
}

Our first container will be MariaDB, as it is simpler and shorter. One of the objects in the container_definitions array will look like this.

{
  name = local.DB_HOST
  image = "mariadb:10.6"
  essential = true
  portMappings = [{
    containerPort = 3306
    hostPort = 3306
    protocol = "tcp"
  }]
  environment = local.MARIADB_ENV_VARIABLES_AWS
  logConfiguration = {
    logDriver = "awslogs"
    options = {
      awslogs-group = "/ecs/nextcloud-logs"
      awslogs-region = "eu-central-1"
      awslogs-stream-prefix = "maria"
    }
  }
}

Starting from the top, we first set the name of our container. We will make it equal to the planned hostname for the database, even though in awsvpc mode we will access it through 127.0.0.1. Next we choose the image that will be pulled from Docker Hub. essential = true means that if this container fails, the whole task is stopped and the service starts a new one. We will also expose port 3306 to the outside; it is protected by the security group either way. containerPort and hostPort have to be equal in awsvpc mode. Next we pass the environment variables we defined earlier. Finally, the last long block is the configuration for CloudWatch Logs, which is quite self-explanatory. To be sure that it will work, create the /ecs/nextcloud-logs group in advance.
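
The log group named in the container definition, /ecs/nextcloud-logs, can also be created from Terraform instead of by hand. This is a minimal sketch; the resource name `Nextcloud-Logs` and the 7-day retention are my assumptions.

```hcl
# Hypothetical log group shared by both containers.
resource "aws_cloudwatch_log_group" "Nextcloud-Logs" {
  name = "/ecs/nextcloud-logs" # must match awslogs-group in the containers
  retention_in_days = 7 # assumed value, adjust to taste
}
```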

Now it's time to define the Nextcloud container with Apache.

{
  name = "Nextcloud"
  image = "nextcloud:latest"
  essential = true
  portMappings = [{
    containerPort = local.HTTP_PORT
    hostPort = local.HTTP_PORT
    protocol = "tcp"
  }]
  logConfiguration = {
    logDriver = "awslogs"
    options = {
      awslogs-group = "/ecs/nextcloud-logs"
      awslogs-region = "eu-central-1"
      awslogs-stream-prefix = "ecs"
    }
  }
  environment = concat(
    local.NEXTCLOUD_ENV_VARIABLES_AWS,
    [{
      name = "NEXTCLOUD_TRUSTED_DOMAINS"
      value = aws_alb.ALB.dns_name
    }]
  )
  dependsOn = [{
    containerName = local.DB_HOST
    condition = "START"
  }]
  mountPoints = [{
    sourceVolume = "nextcloud-storage"
    containerPath = local.NEXTCLOUD_ENV_VARIABLES["NEXTCLOUD_DATA_DIR"]
    readOnly = false
  }]
}

We name the container Nextcloud, choose the nextcloud:latest image, mark it as essential, expose port 80, and configure the same log group as for MariaDB. Here we append the load balancer's DNS name as a trusted domain so that Nextcloud doesn't trigger its security policy (if you use a domain, you can put it here). This container also depends on the database, so ECS won't start it until the database container has started. Next we mount the previously created EFS volume to the /nextcloud directory.
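
Note that the START condition only waits for the database container to be started, not for MariaDB to accept connections. ECS also supports a HEALTHY condition, which requires a healthCheck on the database container. The sketch below shows both pieces as locals; the local names are hypothetical, the `mysqladmin ping` command is my assumption about what is available in the mariadb:10.6 image, and the timings are arbitrary.

```hcl
locals {
  # Hypothetical health check for the MariaDB container.
  # Forcing TCP (-h 127.0.0.1) so the check targets the network listener.
  MARIADB_HEALTHCHECK = {
    command = ["CMD-SHELL", "mysqladmin ping -h 127.0.0.1 -u root -p\"$MARIADB_ROOT_PASSWORD\" || exit 1"]
    interval = 10 # seconds between checks
    timeout = 5
    retries = 5
    startPeriod = 30 # grace period for database initialization
  }

  # Hypothetical stricter dependency for the Nextcloud container.
  NEXTCLOUD_DEPENDS_ON = [{
    containerName = local.DB_HOST
    condition = "HEALTHY"
  }]
}
```

They would be wired in as `healthCheck = local.MARIADB_HEALTHCHECK` in the MariaDB object and `dependsOn = local.NEXTCLOUD_DEPENDS_ON` in the Nextcloud object.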

Let's go back for a moment to EFS security group for the mount point. We have to allow traffic from the ECS security group on some ports.

locals {
  EFS_SG_PORTS = [111, 2049, 2999]
}

resource "aws_security_group" "mount-point-sg" {
  vpc_id = aws_vpc.My-VPC.id
  name = "mount-point-sg"

  dynamic "ingress" {
    for_each = local.EFS_SG_PORTS
    content {
      from_port = ingress.value
      to_port = ingress.value
      protocol = "tcp"
      security_groups = [aws_security_group.ECS-SG.id]
    }
  }

  ...
}

Now for the last part - starting all of it. Let's define an ECS cluster which will hold our service.

resource "aws_ecs_cluster" "Nextcloud" {
  name = "Nextcloud"
}

Next we have to define the service. It will run one instance of the previously defined task on the Nextcloud cluster, in the subnets we choose, and register the container in the target group we created earlier. We have to remember to assign a public IP in order to be able to pull images from Docker Hub. Otherwise, we could use Elastic Container Registry via VPC endpoints or set up a NAT gateway. That's why we run the containers in public subnets.

resource "aws_ecs_service" "Nextcloud" {
  name = "nextcloud"
  cluster = aws_ecs_cluster.Nextcloud.id
  task_definition = aws_ecs_task_definition.Nextcloud-Task.arn
  desired_count = 1
  launch_type = "FARGATE"
  force_new_deployment = true # Always redeploy

  network_configuration {
    subnets = [aws_subnet.public[0].id]
    security_groups = [aws_security_group.ECS-SG.id]
    assign_public_ip = true
  }

  load_balancer {
    target_group_arn = aws_alb_target_group.ECS-TG.arn
    container_name = "Nextcloud"
    container_port = local.HTTP_PORT
  }
}

Finally, we will force the first deployment.

resource "aws_ecs_task_set" "Nextcloud" {
  cluster = aws_ecs_cluster.Nextcloud.id
  launch_type = "FARGATE"
  task_definition = aws_ecs_task_definition.Nextcloud-Task.arn
  service = aws_ecs_service.Nextcloud.id
}

Upon visiting the ALB, we should see the Nextcloud login page.
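
To avoid looking up the address in the console, the load balancer's DNS name can be exposed as an output. The output name `alb_dns_name` is my own choice.

```hcl
# Hypothetical output with the address to open in the browser.
output "alb_dns_name" {
  value = aws_alb.ALB.dns_name
}
```

After `terraform apply`, running `terraform output alb_dns_name` prints the hostname.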

Fun fact

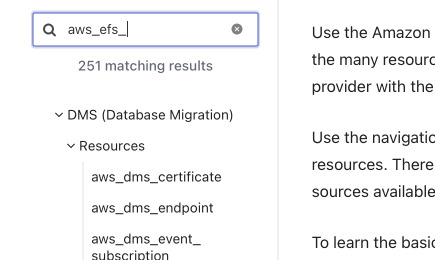

You know how, when you search the Terraform provider documentation, it shows things that are unrelated to what you want to filter?

Try typing a regex-like query, ^aws_efs.* instead of aws_efs, to see better results 😉.