AWS Storage Gateway for Home Backups

25 May 2024

World Backup Day is long behind us, but it's never too late. I was thinking about ways to back up my data at home. I have some USB disks and old computers that still contain some data - maybe valuable, maybe not.

The usual cloud solutions are decently priced - both MEGA and iCloud cost 10€ per month for 2TB. This is comparable to S3 Glacier Flexible Retrieval. However, if I create lifecycle rules that move the data to Deep Archive, the price drops quickly - less than 3€ per month for 2TB. What is more, I don't have full insight into how the data is handled by user-friendly cloud providers. With AWS I know that I can configure cross-region replication and that the data is kept in multiple AZs (except for One Zone-IA). Nevertheless, in this case I am still limited to a single provider, and other problems remain, such as privacy, data sovereignty and jurisdiction.
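To make the comparison above concrete, here is a rough back-of-the-envelope sketch. The per-GB prices are my assumption of the approximate us-east-1 list prices in USD at the time of writing, not figures from this post, and `monthly_cost` is just a hypothetical helper name. It covers storage only and ignores requests, retrievals and minimum storage durations.

```shell
# Storage-only monthly cost: size in GB times price per GB-month.
monthly_cost() {
  awk -v gb="$1" -v price="$2" 'BEGIN { printf "%.2f\n", gb * price }'
}

SIZE_GB=2048  # 2 TB counted as 2048 GB

# Assumed list prices (USD per GB-month); check the S3 pricing page for your region.
echo "Glacier Flexible Retrieval: $(monthly_cost "$SIZE_GB" 0.0036) USD/month"
echo "Glacier Deep Archive:       $(monthly_cost "$SIZE_GB" 0.00099) USD/month"
```

With these assumed prices the two lines come out at 7.37 and 2.03 USD per month, which is in the same ballpark as the numbers quoted above.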

One of the issues with S3 is finding a decent client to interface with it. There are some open-source and commercial clients out there: S3 Explorer, S3 Pro, Cyberduck and FileZilla, to name a few. But AWS has its own offerings as well, so why not use one directly from the source? There's the clunky S3 Console in the browser. There's the AWS CLI. But the most comfortable to use is AWS Storage Gateway. With this solution, S3 buckets are visible as standard NFS or SMB shares.

Today, I will guide you through setting up AWS Storage Gateway and exposing it on the local network - with two prerequisites: a switch/router and a computer with an Ethernet port.

My old PCs

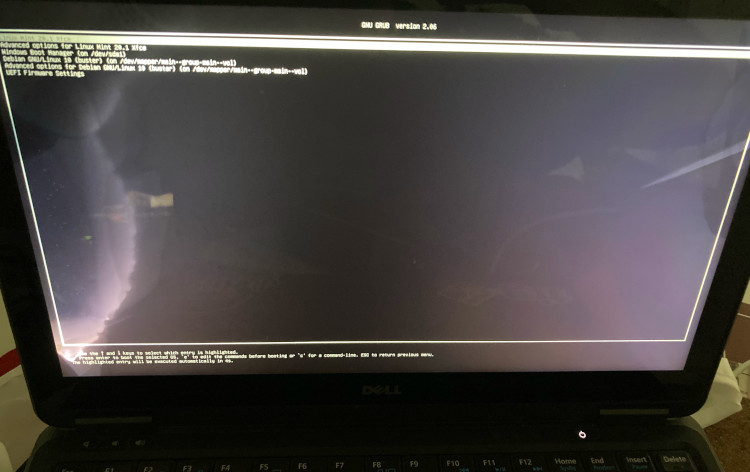

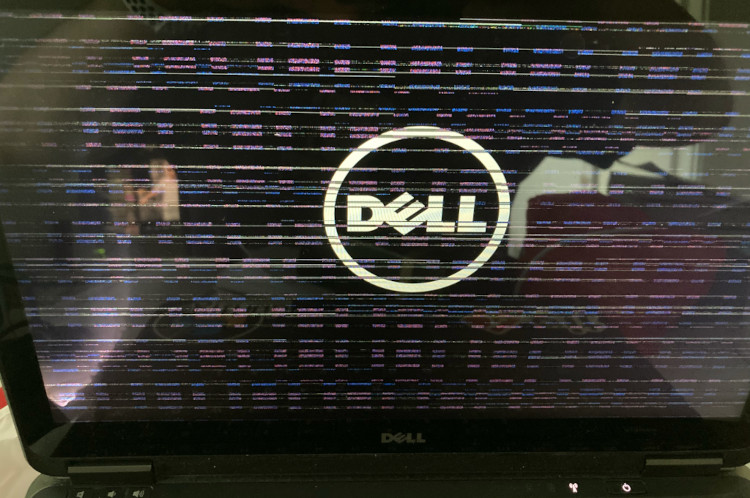

So I have my old Dell laptop and an Intel NUC. Neither of them had been touched in a long time. But recently, I booted the laptop. The screen had only got worse - there are bubbles under the touchscreen, and the internal LCD layers are separating or collecting moisture.

Not only that, but sometimes the laptop just plain glitched. It happened mostly during boot. After such a glitch the laptop would not boot - it only blinked the HDD LED. I had to unscrew it, pop the RAM sticks and the SSD out and put them back in again. Even after that it started complaining about "Memory misconfiguration". Eventually I got back into Linux.

I tried to create the AWS Storage Gateway VM on this laptop. It wasn't as easy as it sounds, but eventually, after some debugging with Google, I got it working in a very specific setup.

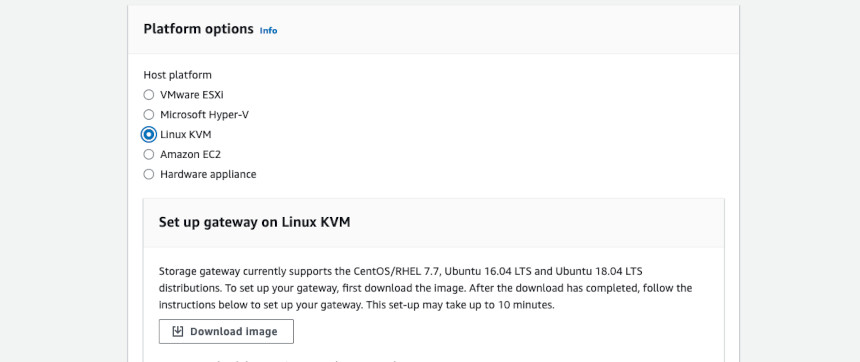

Starting AWS Storage Gateway

As I am just a home Linux user, I don't have any VMware or Hyper-V setup. Fortunately, AWS also offers a KVM image. To obtain the image, go to AWS Storage Gateway in your AWS Console. Click "Create new gateway". You don't need to fill out anything for now. At the bottom of the page you have a list of supported platforms. Download the image for your hypervisor.

I downloaded it and tried to run it with the XML configuration provided for virsh. Even after tweaking the memory, CPU and image location, I couldn't get to the console. But hey, it's a qcow2, so it's just a standard QEMU image.

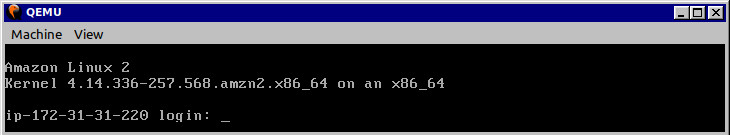

Booting it with VirtualBox didn't work, so I used the standard qemu command to run it and voilà! I see the login screen in my GTK window. However, this is not everything. We need to configure networking so that our VM is accessible from the local network. Also, keep in mind that the disk image provided by AWS can reach up to 80GB by default and will grow over time. So you either have to resize it or free up a lot of space on your host.

$ sudo apt install qemu-system qemu-system-x86 qemu-utils # Ubuntu 20.04 LTS

$ qemu-system-x86_64 -m 4096 -hda prod-us-east-1.qcow2 -accel kvm # Run the VM
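Since the image can grow to around 80GB, a quick pre-flight check of the free space on the host can save a surprise later. This is only a sketch: `has_free_space_gb` is a hypothetical helper, and the 80 comes from the figure mentioned above.

```shell
# Succeeds if the filesystem holding PATH has at least NEED_GB gigabytes available.
# usage: has_free_space_gb PATH NEED_GB
has_free_space_gb() {
  # df -P prints one line per filesystem; column 4 is the available space in KB.
  avail_kb=$(df -Pk "$1" | awk 'NR == 2 { print $4 }')
  [ "$avail_kb" -ge $(( $2 * 1024 * 1024 )) ]
}

# Check the directory where the qcow2 image lives (here: the current directory).
if has_free_space_gb . 80; then
  echo "Enough room for the gateway image"
else
  echo "Less than 80GB free - clean up or move the image elsewhere"
fi
```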

Configuring the network

Just booting into the machine might not be enough. QEMU uses NAT on the host by default and I want to be able to access the NFS share from my other machines on the local network. To do this, I had to add a bridge interface. I want the easiest setup possible, so I will use the bridge-utils package.

$ sudo apt install bridge-utils

$ sudo brctl addbr aws0 # Creates a bridge interface aws0

$ sudo brctl addif aws0 wlp2s0 # Adds the physical interface to the bridge

can't add wlp2s0 to bridge aws0: Operation not supported

Oh no! I can't add my WLAN interface to a bridge. Luckily, my laptop has an RJ-45 port, so I can just connect it with a cable. Bridging over WLAN is more work than forwarding ports over NAT, so I will just stick to the cable 😅. I followed this guide to configure the bridge for QEMU. It is worth automating the steps below, otherwise you will need to repeat them after every system restart.

$ # Create a bridge interface

$ sudo brctl addbr aws0

$ sudo brctl addif aws0 eno1 # Change to your own Ethernet interface

$ # Bring up the interfaces

$ sudo ifconfig eno1 up

$ sudo ifconfig aws0 up

$ # Allow bridged connections traffic

$ sudo iptables -I FORWARD -m physdev --physdev-is-bridged -j ACCEPT

$ sudo dhclient aws0 # I will use my home network's DHCP for the bridge

$ curl https://api.ipify.org # Check if we have public connectivity still
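As a starting point for automating the bridge setup, the steps can be wrapped in a small script. This is a sketch, not what I actually ran: it swaps brctl/ifconfig for the equivalent iproute2 `ip link` commands, and `BRIDGE`, `IFACE` and `DRY_RUN` are hypothetical variable names. By default it only prints the commands, so set `DRY_RUN=0` to really execute them.

```shell
BRIDGE="${BRIDGE:-aws0}"    # bridge name used throughout this post
IFACE="${IFACE:-eno1}"      # change to your own Ethernet interface
DRY_RUN="${DRY_RUN:-1}"     # 1 = only print the commands, 0 = run them with sudo

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    sudo "$@"
  fi
}

setup_bridge() {
  run ip link add name "$BRIDGE" type bridge      # same as: brctl addbr
  run ip link set "$IFACE" master "$BRIDGE"       # same as: brctl addif
  run ip link set "$IFACE" up
  run ip link set "$BRIDGE" up
  run iptables -I FORWARD -m physdev --physdev-is-bridged -j ACCEPT
  run dhclient "$BRIDGE"                          # get an address from the home DHCP
}

setup_bridge
```

Note that `ip link add` fails if the bridge already exists, so the script is meant to run once after boot, for example from a systemd unit or a login script.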

Preparing cache storage

In order to use the File Gateway, you need at least one extra virtual disk that will be used for cache. We will use the qemu-img command to create one and then format it as XFS. I don't know if it's necessary, maybe Storage Gateway supports unformatted disks. But it's still good to go through the process to learn more about QEMU 😆.

First I will create a 160GB qcow2 image. Next I will "mount" it using qemu-nbd, which relies on the network block device (nbd) kernel module on my host to expose QEMU images as disk devices. It's a lower-level step than mounting something like an ISO image on a loop device. For convenience, I will use gparted to format the disk.

$ qemu-img create -f qcow2 aws-cache.qcow2 160G

$ sudo modprobe nbd # Load nbd driver on the host

$ sudo qemu-nbd -c /dev/nbd0 aws-cache.qcow2 # Mount

$ sudo gparted /dev/nbd0

First create a partition table on the disk: select Device -> Create partition table. I created a classic MBR partition table. I don't know if it will work with GPT. Next, create a new partition using the Partition menu and select xfs as the file system. Apply all changes.

After you finish working in GParted, remember to unmount any partitions that may have been mounted automatically and disconnect the nbd device.

$ sudo qemu-nbd -d /dev/nbd0

Running the Gateway

Next we can start our Storage Gateway VM with QEMU. I gave my VM 8GB of RAM, as 4-16GB is recommended. The MAC address can be almost anything, as long as it is unique on your network. The 52:54:00 prefix is the one conventionally used for QEMU/KVM guests and falls in the locally administered range, similar to private IP addresses in layer 3. It is also useful to enable KVM acceleration for QEMU and give the VM at least 2 vCPUs. Even with these settings it might take a while for the VM to be ready.

$ qemu-system-x86_64 -m 8192 \
    -hda prod-us-east-1.qcow2 \
    -hdb aws-cache.qcow2 \
    -net nic,model=virtio,macaddr=52:54:00:88:99:11 \
    -net bridge,br=aws0 \
    -smp 2 \
    -accel kvm
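If you would rather not invent the MAC address by hand, something like the following can generate one. It is a minimal sketch: `random_kvm_mac` is a hypothetical helper that keeps the 52:54:00 prefix and fills the last three bytes from /dev/urandom.

```shell
# Print a MAC address with the 52:54:00 prefix and three random bytes.
random_kvm_mac() {
  od -An -N3 -tx1 /dev/urandom |
    awk '{ printf "52:54:00:%s:%s:%s\n", $1, $2, $3 }'
}

random_kvm_mac
```

Generate it once and reuse the same value on every start, for example by saving it to a file next to the images. A MAC that changes on each boot would make the router hand out a different IP every time.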

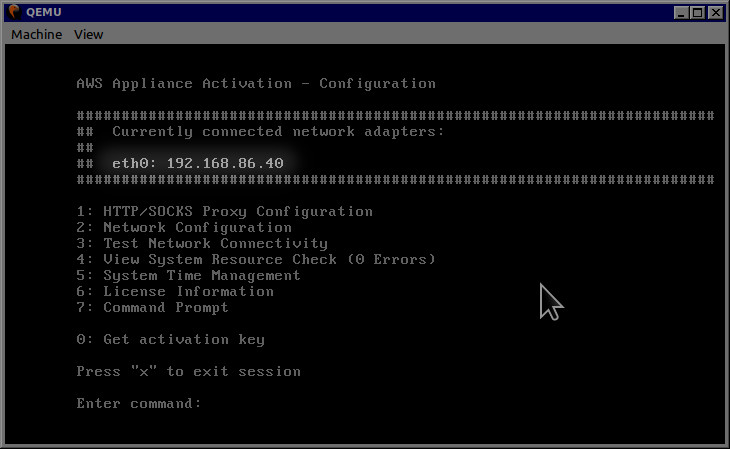

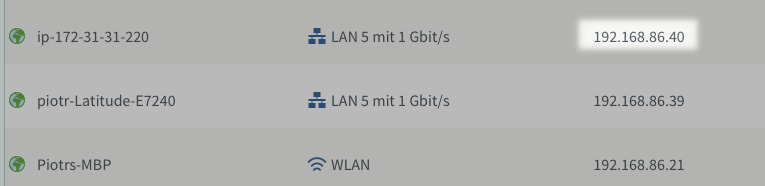

Once your VM is started you have to log in. Use the username admin and the password password. You should be greeted with the AWS Storage Gateway configuration wizard. I took a moment to compare the IP seen by the VM with the one registered in my router. The bridge connection is working!

You see multiple options in the configuration screen. You can test the connectivity first by selecting the appropriate option. To start the real work, press 0 to get the activation key. Fill in the values needed for your gateway. For most use cases it will be just 1 each time, except for the region (for me it is eu-west-1). You should then get the activation key.

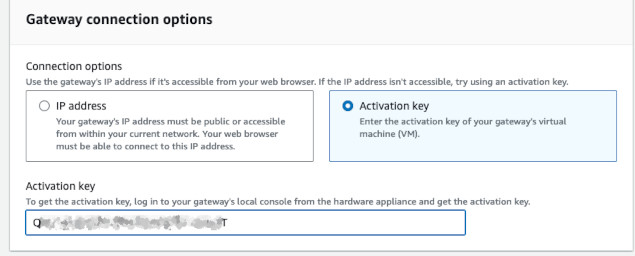

Now, go back to AWS. Create a new gateway. Fill in all the information for your gateway - name and timezone - and select File Gateway. On the second page, in connection options, select Activation key and type in the key you got from the VM. It might take some time to activate the gateway.

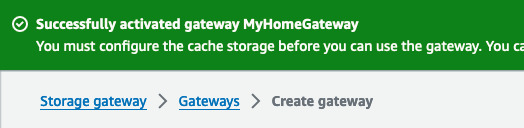

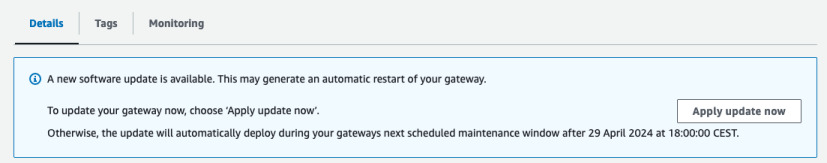

We should be greeted with a success message saying that our gateway is activated. We can proceed to some more configuration required for the file gateway. There might also be some updates to install. My image was very outdated, so I just let the updates install using "Apply immediately" before proceeding.

Configuring cache

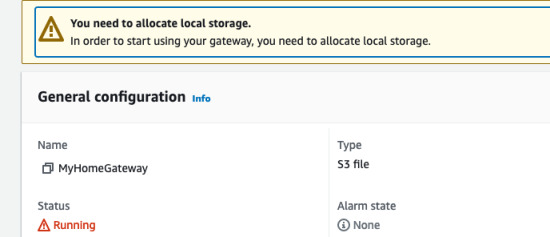

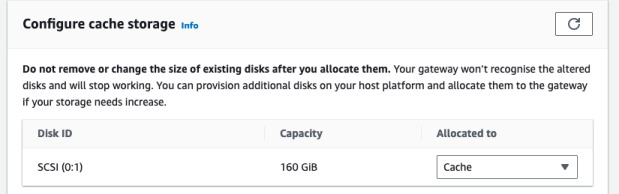

In the "Preparing cache storage" section, we created another virtual disk that is supposed to be used as the gateway's cache. Now we need to specify it in AWS so that the gateway can start using it. Go to Actions -> Configure cache storage and select the disk that is available.

Creating a bucket and a share

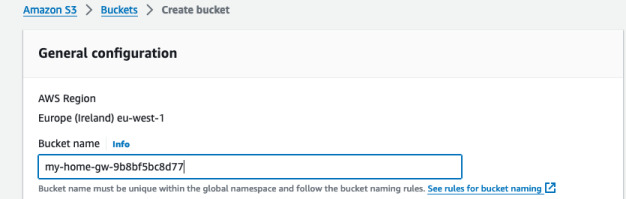

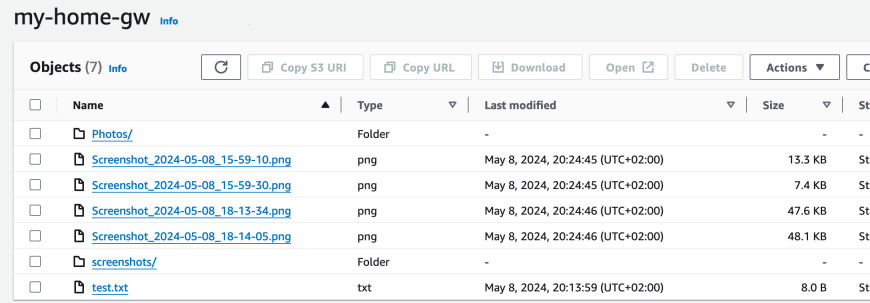

Let's go to our S3 console and create a bucket. Leave all the settings as default but choose a unique name. Keep the region the same as the one your Storage Gateway is in. All the files we share via Storage Gateway will be reflected in this bucket.

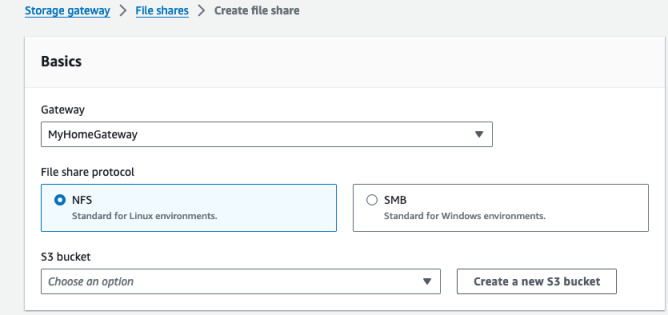

Next go to the Storage Gateway console and select File shares on the left. Create a new NFS share with the specified gateway and bucket. I then edited the share access settings and set the allowed clients to my home network subnet, that is 192.168.86.0/24.

After the share is created, you can look into its details. At the bottom of the page there are example commands for mounting the share from the local network. As of May 2024, the Mac command has a redundant space in the -o options near nolock, so remember to remove it 😅. Also change the IP below to the one of your gateway.
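If a client later gets "access denied" on mount, it is worth double-checking that its IP really falls inside the allowed subnet. Below is a small sketch of such a check using shell arithmetic only. `ip_to_int` and `in_cidr` are hypothetical helper names and they handle IPv4 only.

```shell
# Convert a dotted IPv4 address to a single integer.
ip_to_int() {
  local IFS=.
  set -- $1   # split the address on dots into $1..$4
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeeds if IP is inside the CIDR block.
# usage: in_cidr IP NETWORK/BITS
in_cidr() {
  local ip net bits mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
  [ $(( ip & mask )) -eq $(( net & mask )) ]
}

# The gateway address and subnet used in this post.
if in_cidr 192.168.86.40 192.168.86.0/24; then
  echo "inside the allowed subnet"
fi
```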

$ # On Linux

$ sudo mkdir /mnt/share

$ sudo mount -t nfs \
    -o nolock,hard \
    192.168.86.40:/my-home-gw-123456 \
    /mnt/share

$ # Or on Mac

$ mkdir -p ~/mnt/share

$ sudo mount -t nfs \
    -o vers=3,rsize=1048576,wsize=1048576,hard,nolock \
    -v 192.168.86.40:/my-home-gw-123456 \
    ~/mnt/share

If you can't connect, it's useful to run rpcinfo for troubleshooting. It lists the RPC services registered on the gateway (NFS being one of them). You should see something running on port 2049. If it's not visible, there may be a problem with your gateway configuration. If you see it and still can't mount, or rpcinfo does not respond at all, check the firewall.

$ rpcinfo -p 192.168.86.40

program vers proto port

100000 4 tcp 111 rpcbind

...

100003 3 udp 2049 nfs

100003 3 tcp 2049 nfs
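The check described above can also be scripted, which is handy when testing from several machines. This is a sketch that only parses the `rpcinfo -p` output format shown above. `nfs_tcp_registered` is a hypothetical helper name, and the sample input is the output from this post rather than a live query.

```shell
# Reads rpcinfo -p output on stdin; succeeds if NFS is registered on TCP port 2049.
nfs_tcp_registered() {
  awk '$3 == "tcp" && $4 == 2049 && $5 == "nfs" { found = 1 } END { exit !found }'
}

# Offline demo with the sample output; against a real gateway you would run:
#   rpcinfo -p 192.168.86.40 | nfs_tcp_registered
if nfs_tcp_registered <<'EOF'
program vers proto port
100000 4 tcp 111 rpcbind
100003 3 udp 2049 nfs
100003 3 tcp 2049 nfs
EOF
then
  echo "NFS over TCP is registered"
fi
```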

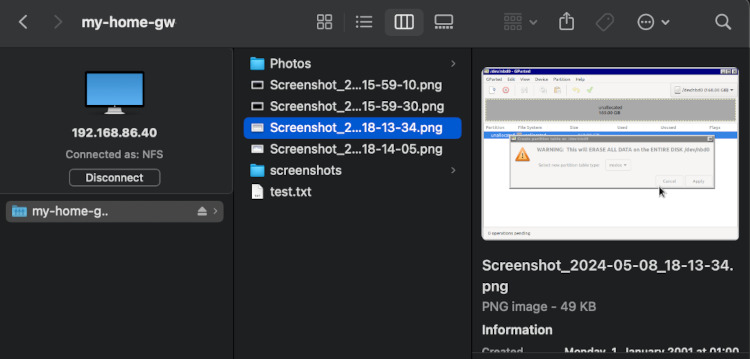

Once the filesystem is mounted I can see the files that are in the bucket in Finder. I can copy new files with ease and synchronize between different devices and the cloud. This gives me a way to not only back up but also process the files on my Mac with low latency, as they are cached on the gateway in the local network.

The uploads do have some latency. After I copied a file in Finder, it took a good 10 seconds for it to appear in S3.

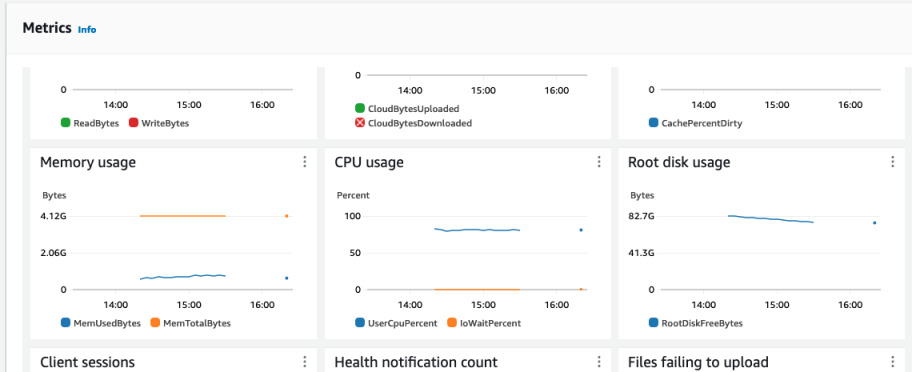

Gateway maintenance

All the maintenance of the gateway is done through the AWS console, CLI or API. There's not much you are able to do using the gateway's terminal (unless you mount the disk on the side). You can perform updates remotely and monitor the metrics that are available in the gateway's Monitoring tab in the AWS console.
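For the CLI route, these are the kind of `aws storagegateway` calls I would expect to use. Treat it as a sketch under assumptions: the gateway ARN below is a made-up placeholder, `REGION`, `GATEWAY_ARN` and `DRY_RUN` are hypothetical variable names, and by default the script only prints the commands instead of calling AWS.

```shell
REGION="${REGION:-eu-west-1}"
# Placeholder ARN - replace with the one returned by list-gateways.
GATEWAY_ARN="${GATEWAY_ARN:-arn:aws:storagegateway:eu-west-1:111122223333:gateway/sgw-EXAMPLE}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = call the AWS CLI

aws_sgw() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ aws storagegateway $* --region $REGION"
  else
    aws storagegateway "$@" --region "$REGION"
  fi
}

gateway_maintenance() {
  aws_sgw list-gateways                                             # find the gateway and its ARN
  aws_sgw describe-gateway-information --gateway-arn "$GATEWAY_ARN" # state, software version, network
  aws_sgw update-gateway-software-now --gateway-arn "$GATEWAY_ARN"  # apply pending updates
}

gateway_maintenance
```

Applying an update may restart the gateway, so it is better done when nothing is being copied to the share.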