Why you should use AppConfig

16 April 2025

We all know that we should keep our configuration in Git. But very often I have seen the configuration stored together with the application code. Why is that a problem? If you want to publish a new configuration, you have to rebuild and restart the whole app (a good CI/CD caching strategy can at least speed up the rebuild). In either case, it is better to be able to change the settings quickly. That is why today I want to talk about the options for storing configuration. In this blog post I will first create an example infrastructure and application, and then we will focus on the settings for this app.

Traditional approaches

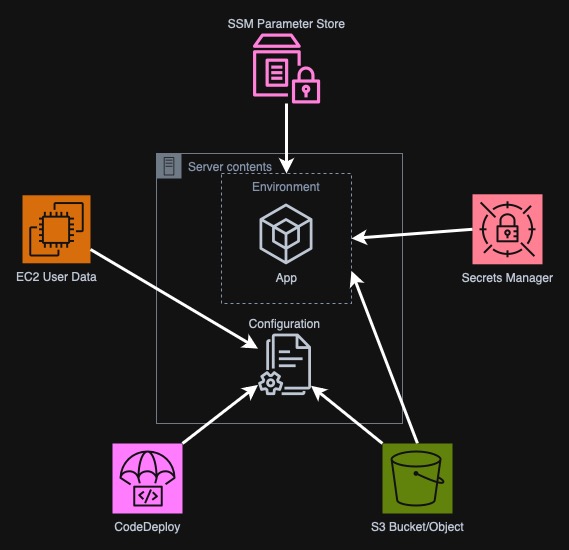

As I have already mentioned, the configuration is usually kept in the repository together with the code. Another common approach is to configure the app through environment variables. In the AWS world, their values often come from Systems Manager Parameter Store, Secrets Manager, S3 objects, EC2 User Data or CodeDeploy. The first two simply store the values for the environment variables. In S3 we can either save any freeform configuration or store an .env file, which ECS can load directly. EC2 User Data and CodeDeploy can only change the application's settings when an instance starts or when a new version is deployed, by creating or downloading a new environment file.

Of course there are other approaches, such as databases (DynamoDB, RDS) or file storage (EFS, FSx). Databases are often the standard way, but you need to write the logic that loads the configuration from them yourself. Other file storage works similarly to User Data, CodeDeploy or S3: you need to restart the app or write logic that reloads the configuration dynamically. We will focus only on the first three approaches, because they make it easy to create environment variables in AWS Elastic Container Service.

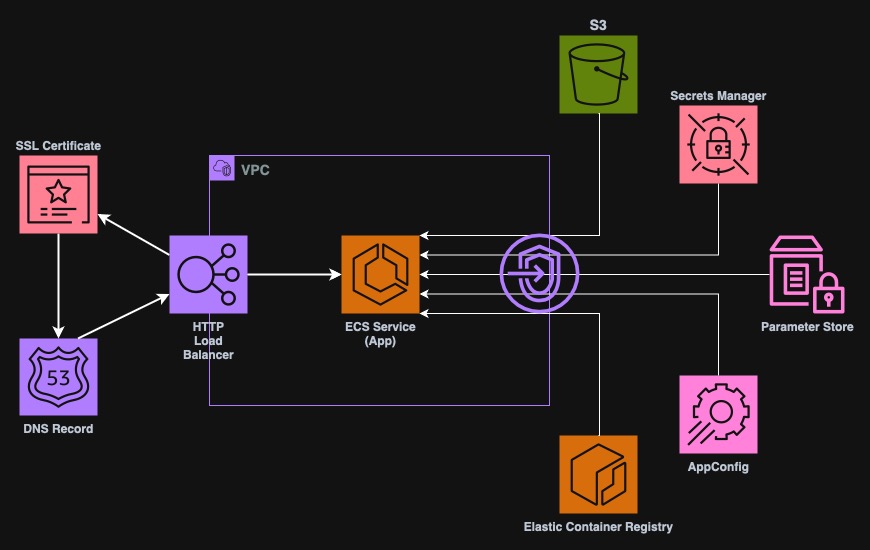

Base infrastructure

Before we can create an app, we need some infrastructure with all the necessary resources. In this repository you can find all the code for it. The image below presents all the parts of the infrastructure. We have a VPC with subnets and security groups (the latter are not in the picture). The load balancer and the Route53 domain allow us to access the application from the Internet. SSL certificates are issued by ACM so that we can use a secure connection. The app itself will run in an ECS cluster that is currently empty. However, as I plan to place the application in a private subnet, we also need some VPC Endpoints to give it access to AWS services. (This is the expensive part, so remember to tear down the infrastructure if you are testing this project.)

I assume that you have your own domain and that its zone is already managed by AWS Route53. If not, you will need to make some changes, such as removing the ACM certificate, creating a plaintext HTTP listener and so on.

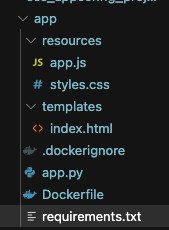

Building the app

As the entire infrastructure is ready, we can finally build the app. It will be a simple Python web app that only displays the values of some environment variables, and FastAPI is a perfect choice for this. Of course, we need to pack the app into a Docker image. I (or rather Claude) created a file called app.py in a new app directory. There are also two other directories, templates and resources, which hold the HTML templates and the CSS and JS files respectively. I have also added a requirements.txt with all the libraries we need to install, and a Dockerfile. You can find all the files in the Git repository under appconfig-example/79e65b7/app.

For now, let's focus on app.py, which is the file that contains all the logic and uses the features described in this post.

```python
from fastapi import FastAPI, Request
from fastapi.responses import HTMLResponse, JSONResponse
from fastapi.templating import Jinja2Templates
from fastapi.staticfiles import StaticFiles
import os
from datetime import datetime

app = FastAPI()

# Mount static files directory
app.mount("/static", StaticFiles(directory="resources"), name="static")
templates = Jinja2Templates(directory="templates")


@app.get("/", response_class=HTMLResponse)
async def read_env_variables(request: Request):
    return templates.TemplateResponse(
        "index.html",
        {
            "request": request,
            "ssm_parameter": os.getenv("SSM_PARAMETER", "Not Set"),
            "ssm_secret_parameter": os.getenv("SSM_SECRET_PARAMETER", "Not Set"),
            "s3_env_parameter": os.getenv("S3_ENV_PARAMETER", "Not Set"),
            "secrets_manager_parameter": os.getenv("SECRETS_MANAGER_PARAMETER", "Not Set"),
            "now": datetime.now
        }
    )


@app.get("/refresh")
async def get_env_variables():
    return JSONResponse({
        "ssm_parameter": os.getenv("SSM_PARAMETER", "Not Set"),
        "ssm_secret_parameter": os.getenv("SSM_SECRET_PARAMETER", "Not Set"),
        "s3_env_parameter": os.getenv("S3_ENV_PARAMETER", "Not Set"),
        "secrets_manager_parameter": os.getenv("SECRETS_MANAGER_PARAMETER", "Not Set"),
        "last_refresh": datetime.now().strftime("%Y-%m-%d %H:%M:%S")
    })
```

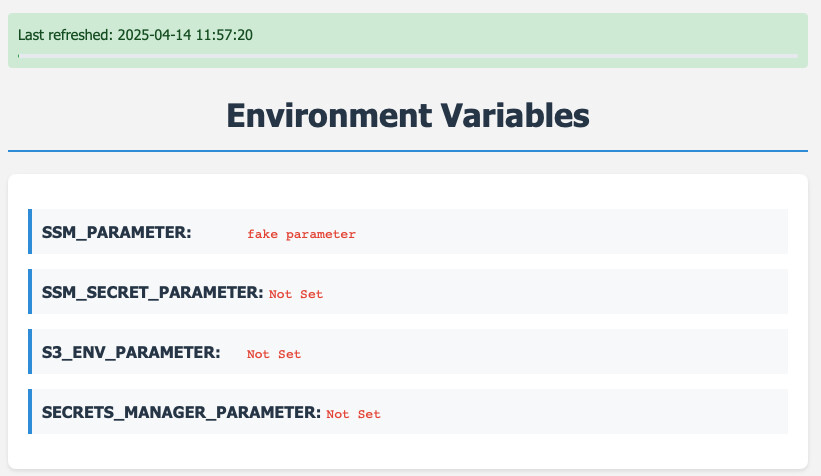

We have three endpoints here. The first is the root page, which shows formatted HTML with all the environment variables listed above. If a variable is not present, the page shows Not Set. The second endpoint, /refresh, returns the same values as JSON, which we can use for dynamic reloading. Lastly, the /static/ path serves the JS and CSS files that make the page easier to test and read. On each web request, the variables are read again from the process environment. First, let's run the app locally to see how it goes.

```console
$ docker build -t ppabis/appconfig-example .
$ docker run --rm -it \
    -p 8080:8080 \
    -e SSM_PARAMETER="fake parameter" \
    ppabis/appconfig-example
INFO: Started server process [1]
INFO: Waiting for application startup.
INFO: Application startup complete.
INFO: Uvicorn running on http://0.0.0.0:8080 (Press CTRL+C to quit)
```

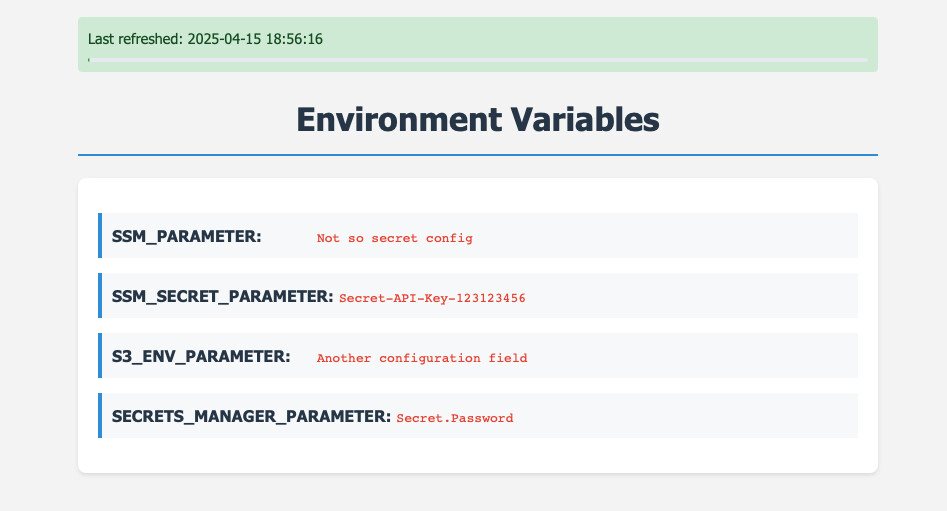

Great, the app starts, we see the page and one of the environment variables is loaded. Let's start by defining the configuration in the AWS services and then running the app in the ECS Cluster.

Configuration Options

Let's create some example configurations. They will be kept in SSM Parameter Store, AWS Secrets Manager and an S3 bucket. Here is an example in Terraform.

```hcl
### SSM Parameter Store
resource "aws_ssm_parameter" "ssm_parameter" {
  name  = "/appconfig_demo/ssm_parameter"
  type  = "String"
  value = "Not so secret config"
}

resource "aws_ssm_parameter" "secure_string_parameter" {
  name  = "/appconfig_demo/secure_string_parameter"
  type  = "SecureString"
  value = "Secret-API-Key-123123456"
}

### Secrets Manager
resource "aws_secretsmanager_secret" "ssm_secret_parameter" {
  name = "appconfig_demo_secret"
}

resource "aws_secretsmanager_secret_version" "ssm_secret_parameter_version" {
  secret_id     = aws_secretsmanager_secret.ssm_secret_parameter.id
  secret_string = "Secret.Password"
}

### S3 Environment File
resource "aws_s3_bucket" "s3_bucket" {
  bucket = "my-s3-bucket-with-configuration"
}

resource "aws_s3_bucket_object" "environment_file" {
  bucket  = aws_s3_bucket.s3_bucket.bucket
  key     = "parameters.env"
  content = <<-EOF
    S3_ENV_PARAMETER="Another configuration field"
  EOF
}
```

We have four kinds of configuration here. First, we can store a simple parameter that is not a secret in Systems Manager Parameter Store. To store a password or an API key, we can use a SecureString parameter in SSM or a secret in Secrets Manager. Secrets Manager offers more features, such as password rotation, but it also costs more. Another very convenient option is an S3 bucket, where we can store a whole list of configuration values in one file, although this is a bit harder to secure. All these values will be delivered to the app as environment variables.
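The S3 option relies on a plain VARIABLE=VALUE file. To preview which variables a file like parameters.env would produce, a small parser can help. This is a minimal sketch under assumed rules (one VARIABLE=VALUE pair per line, blank lines and lines starting with # ignored, values kept verbatim including any quotes); parse_env_file is a hypothetical helper and not the parser ECS uses, so the ECS documentation remains the reference for the exact format.

```python
def parse_env_file(text: str) -> dict[str, str]:
    """Parse VARIABLE=VALUE lines into a dict (simplified sketch, not ECS's own parser)."""
    result: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        # Skip blank lines and comment lines
        if not line or line.startswith("#"):
            continue
        name, sep, value = line.partition("=")
        if not sep or not name:
            raise ValueError(f"Not a VARIABLE=VALUE line: {raw_line!r}")
        # Assumption: the value is kept verbatim, including any quotes
        result[name] = value
    return result


example = '''
# Values delivered to the container
S3_ENV_PARAMETER="Another configuration field"
'''
print(parse_env_file(example))
```

Splitting on the first = only means values may themselves contain = signs, which is common in connection strings.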

Uploading the app to ECR

Before we can run the app on ECS, the image has to be in Elastic Container Registry. I created a Terraform configuration that builds the Docker image and uploads it to ECR. The kreuzwerker/docker provider is configured dynamically and uses a fresh authorization token from AWS. Changing the image_tag variable triggers a new build and push.

```hcl
# Create repository
resource "aws_ecr_repository" "application_repository" {
  name         = "appconfig-demo"
  tags         = { Name = "appconfig-demo" }
  force_delete = true
}

# Build the image
resource "docker_image" "application_image" {
  depends_on   = [aws_ecr_repository.application_repository]
  name         = "${aws_ecr_repository.application_repository.repository_url}:${var.image_tag}"
  keep_locally = true

  build {
    context    = "app"
    dockerfile = "Dockerfile"
  }
}

# Authenticate to ECR
data "aws_caller_identity" "current" {}
data "aws_region" "current" {}

data "aws_ecr_authorization_token" "token" {
  # registry_id expects the AWS account ID, not the registry hostname
  registry_id = data.aws_caller_identity.current.account_id
}

provider "docker" {
  registry_auth {
    address  = "${data.aws_caller_identity.current.account_id}.dkr.ecr.${data.aws_region.current.name}.amazonaws.com"
    username = data.aws_ecr_authorization_token.token.user_name
    password = data.aws_ecr_authorization_token.token.password
  }
}

# Push to ECR
resource "docker_registry_image" "application_image" {
  depends_on    = [docker_image.application_image]
  name          = "${aws_ecr_repository.application_repository.repository_url}:${var.image_tag}"
  keep_remotely = true
}
```
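The registry hostname used in the provider block is derived from the account ID and region, while the full image reference adds the repository name and tag. A minimal sketch of how these strings fit together, assuming the standard aws partition (amazonaws.com); ecr_registry_address and ecr_image_uri are hypothetical helper names:

```python
def ecr_registry_address(account_id: str, region: str) -> str:
    """Hostname of the private ECR registry for an account and region (standard aws partition)."""
    if not (len(account_id) == 12 and account_id.isdigit()):
        raise ValueError("account_id must be a 12-digit AWS account ID")
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


def ecr_image_uri(account_id: str, region: str, repository: str, tag: str) -> str:
    """Full image reference, the same shape as repository_url:image_tag in Terraform."""
    return f"{ecr_registry_address(account_id, region)}/{repository}:{tag}"


print(ecr_image_uri("123456789012", "eu-central-1", "appconfig-demo", "v1"))
```

The account ID alone is what identifies the registry, which is why the hostname can always be rebuilt from the account and region.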

Running the app on ECS

As the app is already in ECR, ECS can now pull it. For simplicity, I used the terraform-aws-modules/ecs module in the code. It creates not only the cluster but also the service, the task definition, IAM roles and so on. The configuration can get pretty deeply nested, so let's go through all the parts.

```hcl
module "ecs" {
  source  = "terraform-aws-modules/ecs/aws"
  version = "5.12.0"

  cluster_name = "appconfig-demo"

  services = {
    appconfig-demo = {
      cpu        = 512
      memory     = 1024
      name       = "appconfig-demo"
      subnet_ids = data.aws_subnets.private_subnets.ids

      runtime_platform = {
        cpu_architecture        = "ARM64"
        operating_system_family = "LINUX"
      }

      security_group_rules = {
        alb_ingress_8080 = {
          type                     = "ingress"
          from_port                = 8080
          to_port                  = 8080
          protocol                 = "tcp"
          description              = "Service port"
          source_security_group_id = data.aws_security_group.alb_sg.id
        }
        egress_all = {
          type        = "egress"
          from_port   = 0
          to_port     = 0
          protocol    = "-1"
          cidr_blocks = ["0.0.0.0/0"]
        }
      }

      load_balancer = {
        service = {
          target_group_arn = data.aws_alb_target_group.target_group.arn
          container_name   = "app"
          container_port   = 8080
        }
      }

      container_definitions = {
        app = {
          image     = "${data.aws_ecr_repository.application_repository.repository_url}:${var.image_tag}"
          essential = true
          port_mappings = [
            {
              name          = "app"
              containerPort = 8080
              protocol      = "tcp"
            }
          ]
          secrets = [
            {
              name      = "SSM_PARAMETER"
              valueFrom = data.aws_ssm_parameter.ssm_parameter.arn
            },
            {
              name      = "SSM_SECRET_PARAMETER"
              valueFrom = data.aws_ssm_parameter.secure_string_parameter.arn
            },
            {
              name      = "SECRETS_MANAGER_PARAMETER"
              valueFrom = tolist(data.aws_secretsmanager_secrets.secrets.arns)[0]
            }
          ]
          environment_files = [
            {
              type  = "s3"
              value = var.env_file_s3_arn
            }
          ]
        } # end of app =
      } # end of container_definitions
    } # end of appconfig-demo =
  } # end of services
} # end of module.ecs
```

In the services block, we define all the services we need. Here we start only one service, called appconfig-demo. We define the standard values such as CPU, memory, name and runtime platform. As I am building the image on a Mac M1, I need to use ARM64. If you build on an x86 machine (for example Linux or Windows on a ThinkPad or Dell), you will probably need to change this to X86_64. Then we need to select the subnets in which the service will run. I used a data source to get all the private subnets created by the vpc module. Next, the module creates a new security group: I allow all outbound traffic and only port 8080 inbound from the load balancer. Speaking of the load balancer, we also need to define which target group our service is associated with when it is deployed and scaled in or out.

Finally, we get to the container definitions. Here I also use a data source to find the ECR repository with our app, and I use the tag defined in a variable. We also need to expose port 8080, and then we can set up all the environment variables. I use the secrets block to define the values from SSM Parameter Store and Secrets Manager, and the environment_files block to define the S3 path to the environment file. Importantly, the S3 path must be given as an object ARN in the format arn:aws:s3:::bucket-name/path/to/file.env, and each environment file must have the .env extension. After we apply the infrastructure, we should have the service deployed behind a load balancer.
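To catch a malformed path before ECS does, the ARN can be built from the bucket name and object key, with the .env check done up front. A sketch with a hypothetical helper, s3_env_file_arn, assuming the standard aws partition:

```python
def s3_env_file_arn(bucket: str, key: str) -> str:
    """Build the S3 object ARN expected in environment_files, checking the .env extension."""
    key = key.lstrip("/")
    if not key.endswith(".env"):
        raise ValueError(f"Environment file key must end with .env: {key!r}")
    # S3 ARNs leave the region and account fields empty, hence the three colons
    return f"arn:aws:s3:::{bucket}/{key}"


print(s3_env_file_arn("my-s3-bucket-with-configuration", "parameters.env"))
```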

Creating configuration in AppConfig

AWS AppConfig is another service that allows us to store configuration for our application. What is special about this service is that it is designed to:

- version the configurations,

- roll them out gradually or roll them back,

- create filters that define conditions for the configuration (for example, only users from a specific country see the new feature),

- validate configurations with Lambda functions,

- provide a local AppConfig Agent that retrieves and caches the configuration, so that we don't pay for too many requests.
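The caching idea from the last point can be illustrated with a small time-based cache: the billable fetch runs at most once per interval, and every other read is served from memory. This is a hypothetical sketch of the concept only, not how the AppConfig Agent is implemented; the TtlCache class, the fetch callable and the 30-second interval are assumptions, and the clock is injectable so the behavior can be checked without waiting.

```python
import time
from typing import Callable, Optional


class TtlCache:
    """Calls fetch() at most once per ttl_seconds and serves the cached value otherwise."""

    def __init__(self, fetch: Callable[[], object], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock
        self._value: object = None
        self._fetched_at: Optional[float] = None

    def get(self) -> object:
        now = self._clock()
        if self._fetched_at is None or now - self._fetched_at >= self._ttl:
            # Cache is empty or stale: pay for one real request
            self._value = self._fetch()
            self._fetched_at = now
        return self._value


# Usage with a fake clock and a fetcher that records when it was called
calls = []
fake_now = [0.0]


def fetch_config():
    calls.append(fake_now[0])
    return {"background": "#1145cd"}


cache = TtlCache(fetch_config, ttl_seconds=30, clock=lambda: fake_now[0])
for t in (0, 10, 29, 30, 45):
    fake_now[0] = t
    cache.get()
print(len(calls))  # 2: at t=0 and t=30
```

Five reads cost only two fetches here; the longer the interval, the fewer paid requests, at the price of picking up changes later.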

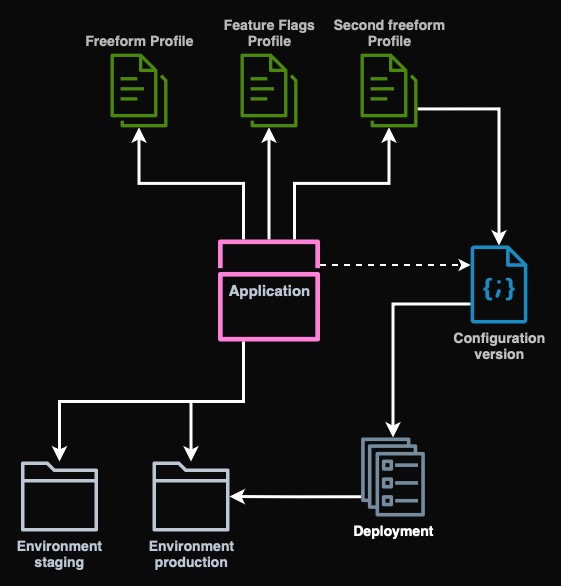

Each configuration consists of several parts. First comes the application, which is a top-level container. Then we have environments, which can be used to separate stages or types of deployments, such as production, staging, desktop or mobile.

Another part is the configuration profile, which is somewhat similar to a schema in a database. It comes in two flavors: feature flags or freeform. Feature flags require a specific structure, while freeform, as the name suggests, is unstructured. The profile also defines the location of the configuration and any Lambda validators. I will use only the hosted configuration type (so that we don't have to deal with SSM or S3) and no validators.

Next comes the configuration version, which contains the actual content of the configuration and its content type, such as JSON or YAML (the feature flags type uses JSON). Each version is deployed to an environment, either all at once or gradually.

Below is a diagram of how the parts relate to each other.

We will define all of these parts in Terraform. I will use a hosted profile with a freeform YAML configuration and deploy it all at once to the live environment.

```hcl
resource "aws_appconfig_application" "appconfig_application" {
  name = "appconfig-demo"
}

resource "aws_appconfig_environment" "live" {
  name           = "live"
  application_id = aws_appconfig_application.appconfig_application.id
}

resource "aws_appconfig_configuration_profile" "prof" {
  application_id = aws_appconfig_application.appconfig_application.id
  name           = "main"
  location_uri   = "hosted"
  description    = "Example profile"
}

resource "aws_appconfig_hosted_configuration_version" "live_main" {
  application_id           = aws_appconfig_application.appconfig_application.id
  configuration_profile_id = aws_appconfig_configuration_profile.prof.configuration_profile_id
  content_type             = "application/yaml"
  content                  = <<-EOF
    background: '#1145cd'
  EOF
}

resource "aws_appconfig_deployment" "live_main" {
  application_id           = aws_appconfig_application.appconfig_application.id
  configuration_profile_id = aws_appconfig_configuration_profile.prof.configuration_profile_id
  configuration_version    = aws_appconfig_hosted_configuration_version.live_main.version_number
  environment_id           = aws_appconfig_environment.live.environment_id
  deployment_strategy_id   = "AppConfig.AllAtOnce"
  description              = "Deployment of live environment"
}
```

Connecting our application to AppConfig

I will use the AppConfig Agent as a sidecar container in ECS, because it is much easier to use than the AWS APIs. It also has built-in caching and exposes a super easy HTTP API on port 2772. We just need to add requests to our container and we are almost ready to go. As I use a YAML configuration (everybody knows it's superior to JSON), I also need the PyYAML package.

In the new file config.py, I created the following two functions. get_config tries to retrieve the configuration from the AppConfig Agent and raises an exception if anything goes wrong. The other function, get_value, tries to retrieve a single configuration field (assuming that the configuration is a dictionary) and returns the default value in case of any problems. At the top, I defined variables for the application, environment and profile names, which are the same as in the Terraform code above.

```python
import requests
import yaml

APPLICATION = "appconfig-demo"
ENVIRONMENT = "live"
PROFILE = "main"


def get_value(key, default):
    """Return a single field from the configuration, or default on any problem."""
    try:
        c = get_config()
    except Exception as e:
        print(f"Error: {e}")
        return default
    if not isinstance(c, dict):
        return default
    if key in c:
        return c[key]
    return default


def get_config():
    """Fetch the configuration from the local AppConfig Agent sidecar."""
    # A short timeout keeps a page request from hanging if the agent is unavailable
    req = requests.get(
        f"http://localhost:2772/applications/{APPLICATION}/environments/{ENVIRONMENT}/configurations/{PROFILE}",
        timeout=2,
    )
    if 200 <= req.status_code < 300:
        # Parse YAML content; return anything else as plain text
        if req.headers.get("Content-Type") == "application/yaml":
            return yaml.safe_load(req.text)
        else:
            return req.text
    else:
        raise Exception(f"{req.status_code}: {req.text}")
```
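Because get_value swallows every error and falls back to the default, it is worth checking that behavior without a running agent. One way, sketched here, is to pass the fetch step in as a parameter. get_value_from is a hypothetical refactoring for illustration, not the code from the repository; the fake fetchers stand in for the agent.

```python
from typing import Any, Callable


def get_value_from(fetch: Callable[[], Any], key: str, default: Any) -> Any:
    """Return config[key] from fetch(), or default if fetching fails or the key is missing."""
    try:
        config = fetch()
    except Exception as exc:  # agent down, HTTP error, parse error, ...
        print(f"Error: {exc}")
        return default
    if not isinstance(config, dict):
        return default
    return config.get(key, default)


def agent_down() -> Any:
    # Simulates the sidecar not being reachable yet
    raise ConnectionError("connection refused on localhost:2772")


print(get_value_from(lambda: {"background": "#1145cd"}, "background", "#e3ffe3"))
print(get_value_from(agent_down, "background", "#e3ffe3"))
print(get_value_from(lambda: "plain text config", "background", "#e3ffe3"))
```

With the fetch step injectable, the same function can be wired to get_config in production and to fakes in tests.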

Now I want to show the value on the main page. In app.py, I import get_value and add the background field to both endpoints that return the configuration to the page.

```python
...
from config import get_value


@app.get("/", response_class=HTMLResponse)
async def read_env_variables(request: Request):
    return templates.TemplateResponse(
        "index.html",
        {
            "request": request,
            "background": str(get_value("background", "#e3ffe3")),
            ...
        }
    )


@app.get("/refresh")
async def get_env_variables():
    return JSONResponse({
        "background": str(get_value("background", "#e3ffe3")),
        "ssm_parameter": os.getenv("SSM_PARAMETER", "Not Set"),
        ...
    })
```
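The background value goes straight from AppConfig into the page, so a typo in the configuration could break the styling. One option is a small guard that only accepts hex colors and otherwise falls back to the default. safe_color is a hypothetical helper (not part of the original app), and restricting values to #rgb and #rrggbb is an assumption; AppConfig validators would be the service-side alternative.

```python
import re

# Accepts #rgb and #rrggbb hex colors only (an assumption; CSS allows more formats)
HEX_COLOR = re.compile(r"#(?:[0-9a-fA-F]{3}|[0-9a-fA-F]{6})")


def safe_color(value: object, default: str) -> str:
    """Return value if it is a hex color string, otherwise the default."""
    if isinstance(value, str) and HEX_COLOR.fullmatch(value.strip()):
        return value.strip()
    return default


print(safe_color("#1145cd", "#e3ffe3"))
print(safe_color("red; } body { display: none", "#e3ffe3"))
```

In app.py it would wrap the lookup, for example safe_color(get_value("background", "#e3ffe3"), "#e3ffe3").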

However, our application will still not work. We still need the sidecar container and the IAM permissions for AppConfig. Because we placed our app in a private subnet, we also need to add a VPC Endpoint for AppConfig and create a private ECR repository to copy the AppConfig Agent image into. I will again use the docker provider to push the image to the new ECR repository.

```hcl
resource "aws_ecr_repository" "appconfig_agent_repository" {
  name         = "appconfig-agent"
  force_delete = true
}

resource "docker_image" "appconfig_agent_image" {
  name = "public.ecr.aws/aws-appconfig/aws-appconfig-agent:2.x"
}

resource "docker_tag" "appconfig_agent_image_tag" {
  source_image = docker_image.appconfig_agent_image.image_id
  target_image = "${aws_ecr_repository.appconfig_agent_repository.repository_url}:latest"
}

resource "docker_registry_image" "appconfig_agent_image" {
  depends_on    = [docker_tag.appconfig_agent_image_tag]
  name          = "${aws_ecr_repository.appconfig_agent_repository.repository_url}:latest"
  keep_remotely = true
}
```

Now I add the container to our ecs module. Below the module, I also add some extra IAM permissions to the task role so that it is allowed to access the AppConfig service, because this access is not included in the module by default.

```hcl
module "ecs" {
  ...
      container_definitions = {
        app = {
          image = "${data.aws_ecr_repository.application_repository.repository_url}:${var.image_tag}"
          ...
        } # end of app =

        agent = {
          image     = "${data.aws_ecr_repository.appconfig_agent_repository.repository_url}:latest"
          essential = true
          port_mappings = [
            {
              name          = "agent"
              containerPort = 2772
            }
          ]
        } # end of agent =
      }
  ...
}

# Add some permissions
data "aws_iam_policy_document" "appconfig_agent_policy" {
  statement {
    actions = [
      "appconfig:StartConfigurationSession",
      "appconfig:GetLatestConfiguration",
      "appconfig:GetConfiguration",
      "appconfig:GetConfigurationProfile",
    ]
    resources = ["*"]
  }
}

resource "aws_iam_role_policy" "appconfig_agent_policy" {
  name   = "AppConfigECSAgentPolicy"
  policy = data.aws_iam_policy_document.appconfig_agent_policy.json
  role   = module.ecs.services["appconfig-demo"].tasks_iam_role_name
}
```

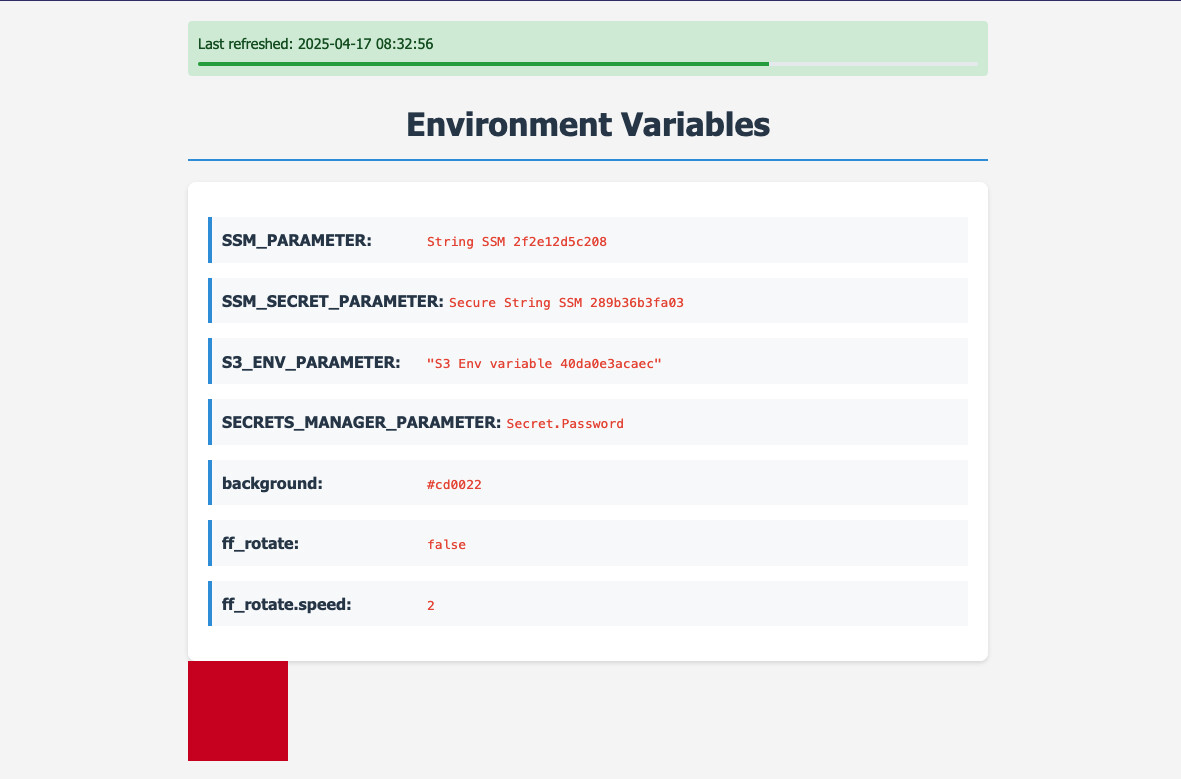

Now we can deploy the application to ECS. I also modified the HTML page so that it displays the background value and a new square whose color should change according to that value. I implemented this in JavaScript as well, so the square is refreshed dynamically.

Feature flags

AppConfig has a lot of different options, and it would take a lot of time to describe and try all of them, but in this post I also want to show you how to use feature flags. We need to define another profile and provide a configuration version in the specific JSON format that feature flags require.

```hcl
resource "aws_appconfig_configuration_profile" "featureflags" {
  application_id = aws_appconfig_application.appconfig_application.id
  name           = "featureflags"
  location_uri   = "hosted"
  description    = "Feature flags example"
  type           = "AWS.AppConfig.FeatureFlags"
}

resource "aws_appconfig_hosted_configuration_version" "featureflags" {
  application_id           = aws_appconfig_application.appconfig_application.id
  configuration_profile_id = aws_appconfig_configuration_profile.featureflags.configuration_profile_id
  content_type             = "application/json"
  content = jsonencode({
    flags: {
      ff_rotate: {
        name: "ff_rotate",
        attributes: {
          speed: {
            constraints: {
              type: "number",
              required: true
            }
          }
        }
      }
    },
    values: {
      ff_rotate: {
        enabled: true,
        speed: 10
      }
    },
    version : "1"
  })
}

resource "aws_appconfig_deployment" "featureflags" {
  application_id           = aws_appconfig_application.appconfig_application.id
  configuration_profile_id = aws_appconfig_configuration_profile.featureflags.configuration_profile_id
  configuration_version    = aws_appconfig_hosted_configuration_version.featureflags.version_number
  environment_id           = aws_appconfig_environment.live.environment_id
  deployment_strategy_id   = "AppConfig.AllAtOnce"
  description              = "Deployment of feature flags to live"
}
```

Here I have created a configuration that contains a flag called ff_rotate. It does not have any conditions, just a single numeric attribute called speed. However, in the application I will only use the enabled/disabled state, which is always present. Using the attribute of this feature flag is an exercise for the reader 😉.
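The code that reads the flag in the app is not shown in this post. As an assumption, when a feature flags profile is retrieved (for example through the agent), the returned JSON holds one object per flag with an enabled field plus its attributes, like {"ff_rotate": {"enabled": true, "speed": 10}}. A hypothetical helper for reading the enabled state, with a safe default when the payload or flag is unusable, could look like this (is_enabled is an illustrative name):

```python
import json
from typing import Any


def is_enabled(flags_json: str, flag: str, default: bool = False) -> bool:
    """Return the enabled state of a flag, or default if the payload or flag is unusable."""
    try:
        flags: Any = json.loads(flags_json)
    except json.JSONDecodeError:
        return default
    entry = flags.get(flag) if isinstance(flags, dict) else None
    if not isinstance(entry, dict):
        return default
    return bool(entry.get("enabled", default))


# Assumed payload shape, mirroring the "values" section of the Terraform above
payload = '{"ff_rotate": {"enabled": true, "speed": 10}}'
print(is_enabled(payload, "ff_rotate"))
print(is_enabled(payload, "ff_missing"))
```

Defaulting to False means a missing or broken flag switches the feature off rather than on, which is usually the safer failure mode.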

Configuration changes

I will now change the configuration in all the services: SSM, Secrets Manager and AppConfig. First, let's try changing the SSM parameters and the secret.

We see that no changes are reflected. Even though the page reloaded the configuration from the server, nothing actually changed, because ECS injects these values into the container as environment variables only when the container starts. Let's continue by changing the AppConfig configuration while we wait to see whether anything happens to the SSM parameters or the Secrets Manager secret.

As we see here, all the changes are now visible. However, for the parameters and secrets to be reloaded, we had to restart the container. This not only takes time but can also break connections or lose data if our application is stateful (such as a multiplayer game server). Thanks to AppConfig, we can easily change the configuration without restarting.
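The restart is needed because a process receives a copy of its environment when it starts; later changes elsewhere are invisible to it. The following local analogy uses plain Python processes rather than ECS: a child started with the old value keeps it, and only a newly started child sees the new one. SSM_PARAMETER is reused as the variable name purely for illustration.

```python
import os
import subprocess
import sys

# The child waits for a line on stdin, then prints the variable it sees
CHILD_CODE = (
    "import os, sys\n"
    "sys.stdin.readline()\n"
    "print(os.getenv('SSM_PARAMETER', 'Not Set'))\n"
)


def start_child() -> subprocess.Popen:
    """Start a Python process that inherits a copy of the current environment."""
    return subprocess.Popen(
        [sys.executable, "-c", CHILD_CODE],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        text=True,
    )


os.environ["SSM_PARAMETER"] = "old value"
running = start_child()                     # like the container that is already running

os.environ["SSM_PARAMETER"] = "new value"   # like updating the parameter in SSM
seen_by_running, _ = running.communicate("go\n")

restarted = start_child()                   # like restarting the container
seen_by_restarted, _ = restarted.communicate("go\n")

print(seen_by_running.strip())    # old value
print(seen_by_restarted.strip())  # new value
```

AppConfig avoids this because the app asks the agent for the configuration at request time instead of reading a value frozen at startup.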

Closing

We went through some of the most important parts of AppConfig. However, as mentioned, this service is very advanced and has many more features. I'm planning to guide you through some of them in a future post, where we will cover validation, importing configuration from SSM, S3 and Secrets Manager, event extensions, and conditional feature flags.