RDS Blue/Green Deployments while using IaC

1 July 2025

Today, we are going to explore a way to perform a blue/green deployment of an RDS database (MySQL and PostgreSQL) when all the infrastructure is created from CloudFormation and Terraform. You might ask yourself, why even bother to do a blue/green deployment of a database? The answer is very, very simple:

- you can test the new version of an engine for an indefinite time,

- all the data is replicated,

- the switchover has minimal downtime and uses the same endpoints,

- it is not difficult in AWS RDS, dare to say straightforward.

We will start by creating a base infrastructure with a VPC, subnets, security groups and an EC2 instance that we will use for some experiments later. I will also create a secret containing the password for the databases. The infrastructure will look like this:

In the EC2 instance that is in public subnet, I will install some tools like PostgreSQL and MariaDB clients and Docker for the application that we will implement shortly. I have implemented this part in Terraform and you can find it in the repository here: vpc.

Creating the databases

In a separate directory, I have created Terraform code to create PostgreSQL on

RDS in version 13. I have defined also variables that we have to provide from

the vpc module. The full code is in

postgresql directory.

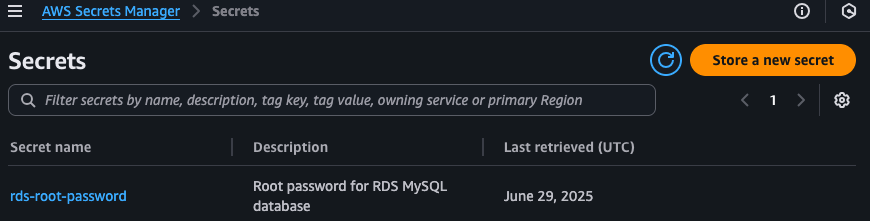

I will take the password from Secrets Manager's Secret I have created in the VPC

part. You can't use manage_master_user_password because such configuration is

not supported for blue/green deployments in RDS.

data "aws_secretsmanager_secret" "root_password" {

name = "rds-root-password"

}

data "aws_secretsmanager_secret_version" "root_password" {

secret_id = data.aws_secretsmanager_secret.root_password.id

}

resource "aws_db_subnet_group" "rds" {

name = "postgresql-database-subnet-group"

description = "Subnet group for RDS PostgreSQL database"

subnet_ids = var.subnet_ids

}

resource "aws_db_instance" "postgresql" {

identifier = "postgresql-database"

# Database configuration

db_name = "postgresql_database"

engine = "postgres"

engine_version = "13.21"

# Instance configuration

instance_class = "db.t4g.medium"

allocated_storage = 20

storage_type = "gp3"

storage_encrypted = true

# Network configuration

vpc_security_group_ids = [var.security_group_id]

db_subnet_group_name = aws_db_subnet_group.rds.name

publicly_accessible = false

multi_az = false

# Authentication

username = "root"

password = jsondecode(data.aws_secretsmanager_secret_version.root_password.secret_string).password

# Backup and maintenance

backup_retention_period = 1

deletion_protection = false

auto_minor_version_upgrade = false

skip_final_snapshot = true

}

To configure it completely, in postgresql directory create a file called

terraform.tfvars with the following content (based on applied VPC part, use

tofu output to get the values). Alternatively, you can combine the two

directories as modules and pass outputs directly.

# Output from the VPC module

# postgresql_sg_id = "sg-0789abcd123456789"

# private_subnet_ids = [

# "subnet-00112233445566778",

# "subnet-0ef12345678901234",

# ]

secret_name = "rds-root-password"

security_group_id = "sg-0789abcd123456789"

subnet_ids = [

"subnet-00112233445566778",

"subnet-0ef12345678901234",

]

Apply it to create the database. Similarly I have created a MySQL RDS database

in version 8.0 using CloudFormation. I will provide also the security group and

the subnet IDs from the VPC module. I will use AWS CLI to deploy the template.

You can also create a Terraform resource that will deploy this CloudFormation

template and get the vpc module outputs as parameters directly.

AWSTemplateFormatVersion: '2010-09-09'

Description: 'RDS MySQL 8.0 Database with managed password'

Parameters:

SecurityGroupId:

Type: AWS::EC2::SecurityGroup::Id

SubnetIds:

Type: List<AWS::EC2::Subnet::Id>

SecretName:

Type: String

Resources:

RDSInstance:

Type: AWS::RDS::DBInstance

Properties:

DBInstanceIdentifier: mysql-database

DBName: my_database

Engine: mysql

EngineVersion: '8.0.33'

AutoMinorVersionUpgrade: false

DBInstanceClass: db.t4g.medium

AllocatedStorage: 20

StorageType: gp3

StorageEncrypted: true

BackupRetentionPeriod: 1

MultiAZ: false

DBSubnetGroupName: !Ref RDSSubnetGroup

PubliclyAccessible: false

VPCSecurityGroups:

- !Ref SecurityGroupId

MasterUsername: root

MasterUserPassword: !Sub '{{resolve:secretsmanager:${SecretName}:SecretString:password}}'

DeletionProtection: false

RDSSubnetGroup:

Type: AWS::RDS::DBSubnetGroup

Properties:

SubnetIds: !Ref SubnetIds

cd mysql/

AWS_DEFAULT_REGION=eu-west-2

SECURITY_GROUP=$(tofu -chdir=../vpc output -raw mysql_sg_id)

SUBNET_IDS=$(tofu -chdir=../vpc output -json private_subnet_ids | jq -r 'join(",")')

aws cloudformation deploy \

--template-file mysql.yaml \

--stack-name mysql-database \

--parameter-overrides SecurityGroupId=$SECURITY_GROUP SubnetIds=$SUBNET_IDS SecretName=rds-root-password

After you create both databases, you can retrieve the passwords for the root user from Secrets Manager. We will use them to monitor the databases and check if the replication works correctly. You can do it via AWS Console or CLI.

aws secretsmanager get-secret-value \

--secret-id $(tofu output -chdir=../vpc -raw root_password_arn) \

--query SecretString \

--output text \

| jq -r ".password"

The application to check the database

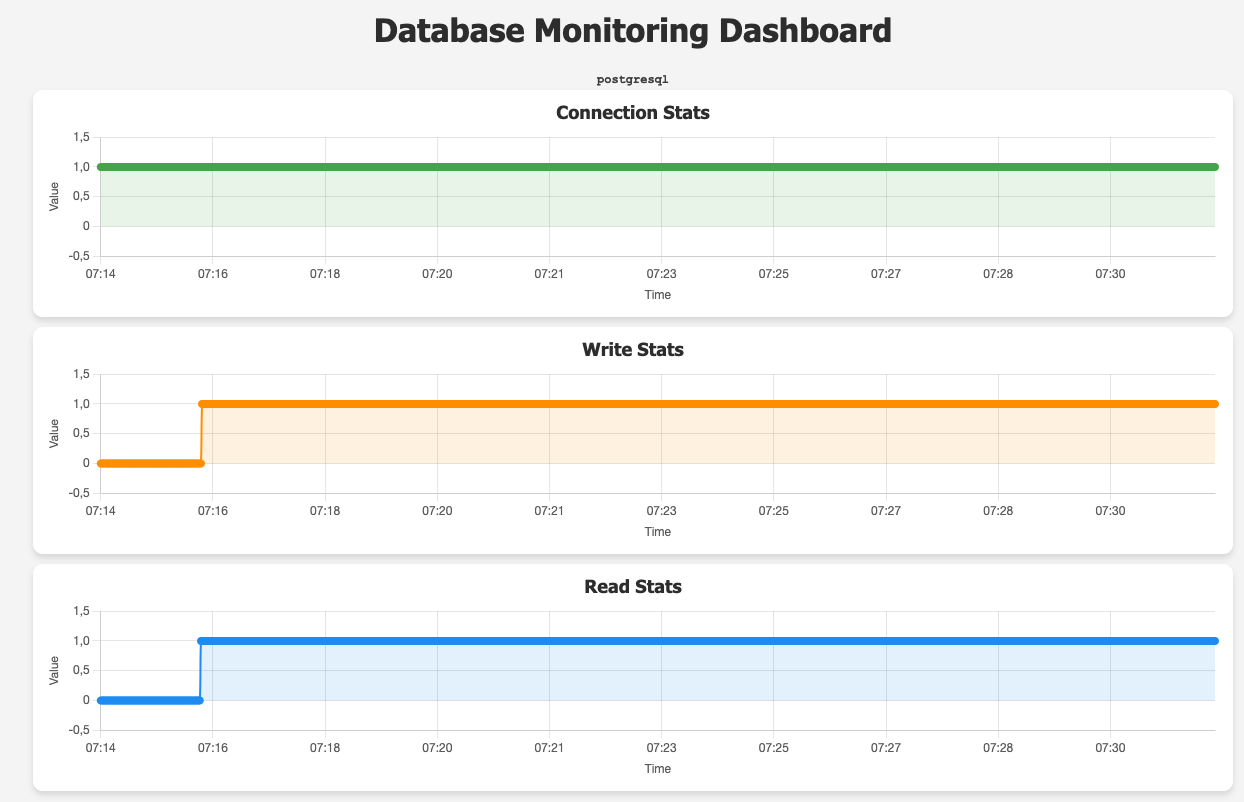

Before we just jump to modify the databases, I will create a simple application that will use the database constantly. Every second or so it will write a record and every second it will read some data. Moreover, it will track all the things that happen: connection success or failure, write success or failure, read success or failure.

As usual, I will use Python for the application because it is easy 🤷 and doesn't need compilation. I will pack it into a Docker container, so that it can run easily anywhere. I wanted to write step-by-step process of writing this application, but it went so long and diverged too much from the topic of this post. Find the description here: Monitoring application. Follow instructions there to test the application or just continue with the flow 😄.

I will connect with Systems Manager to the instance, clone the Git repository

and build the Docker image for the application. Then using Docker Compose I will

create two instances of the application, one for MySQL and one for PostgreSQL.

Both published ports (8080 and 8081 in my case) I will open on the security

groups to my home IP address. This step is optional if you want to see yourself

how the blue/green deployment affects RDS availability.

services:

web:

build:

context: .

dockerfile: Dockerfile

ports:

- "8080:8000"

environment:

- DB_HOST=mysql-database.abcdefghi.eu-west-2.rds.amazonaws.com

- DB_PORT=3306

- DB_USER=root

- DB_PASSWORD=xxx

- DB_NAME=my_database

- MONITOR_MODE=mysql

web2:

build:

context: .

dockerfile: Dockerfile

ports:

- "8081:8000"

environment:

- PG_HOST=postgresql-database.abcdefghi.eu-west-2.rds.amazonaws.com

- PG_PORT=5432

- PG_USER=root

- PG_PASSWORD=xxx

- PG_DB=postgresql_database

- MONITOR_MODE=postgresql

For the service to work, you need to create the tables in both databases. Log in to the database using the CLI tools that should be installed on the EC2 instance. Below you can find the two SQL scripts for MySQL and PostgreSQL that should be applied to the databases to make the application work. Especially important to apply this to PostgreSQL before creating the blue/green deployment as DDL clauses are not replicated.

USE my_database;

CREATE TABLE IF NOT EXISTS test_table (id INT PRIMARY KEY AUTO_INCREMENT, timestamp TIMESTAMP);

CREATE TABLE IF NOT EXISTS test_table (id INT GENERATED ALWAYS AS IDENTITY PRIMARY KEY, timestamp TIMESTAMP);

Apply both scripts to the databases and then check the app if the graphs show read and write successes. Enter password when prompted.

psql -h postgresql-database.abcdefghi.eu-west-2.rds.amazonaws.com -U root -d postgresql_database -f pgsql-init.sql -W

cat mysql-init.sql | mysql -h mysql-database.abcdefghi.eu-west-2.rds.amazonaws.com -u root -p

Creating the blue/green deployment

Finally we can create a blue/green deployment of both databases. After all it is supposed to be replicated so the same credentials should work as well as any row we create or update to should be copied over. I will show you both ways to create the blue/green deployment: AWS Console and AWS CLI.

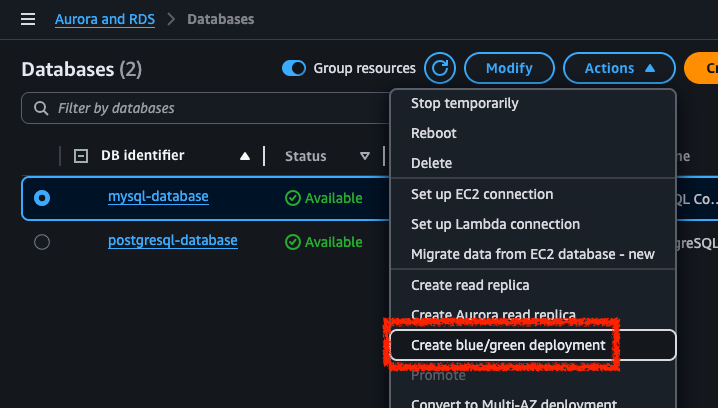

Open AWS Console in the region where you database is located (I chose eu-west-2) and search for RDS. If you database is still in "Creating" state then just wait until you see green "Available" status. Then select the database using radio button on the left and click "Actions" and next "Create blue/green deployment".

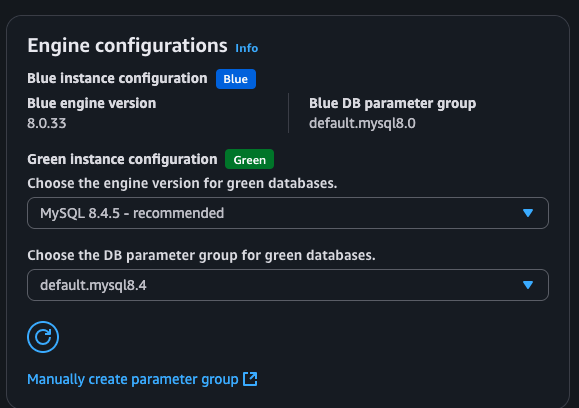

You will see a new form where you can customize the new database. I have just selected a different engine version (8.4.5). Proceed to create the deployment. During this process, I was observing the connectivity to the database using application from the previous section.

You can also create a blue/green deployment using AWS CLI with the following

command. Save BlueGreenDeploymentIdentifier from this command as you will need

it later.

$ # Get DB Instance ARN from CloudFormation or Terraform output

$ aws rds create-blue-green-deployment \

--blue-green-deployment-name mysql-blue-green-deployment \

--source arn:aws:rds:eu-west-2:012345678912:db:mysql-database \

--target-engine-version 8.4.5 \

--target-db-parameter-group-name default.mysql8.4 \

--region eu-west-2

{

"BlueGreenDeployment": {

"BlueGreenDeploymentIdentifier": "bgd-abcdef123efghijk",

...

The green database will be created and will have a new endpoint. When it's ready, you can use this endpoint to connect to this green replica. It will be read-only. Find it in the AWS Console in RDS section or query for the list of databases using CLI.

PostgreSQL

For PostgreSQL, I suggest doing it via the console. It will inform you on the

incompatibilities in the source database parameter group but also gives you

hints what is not supported for replication. You can learn more here:

PostgreSQL limitations for blue/green deployments with logical replication.

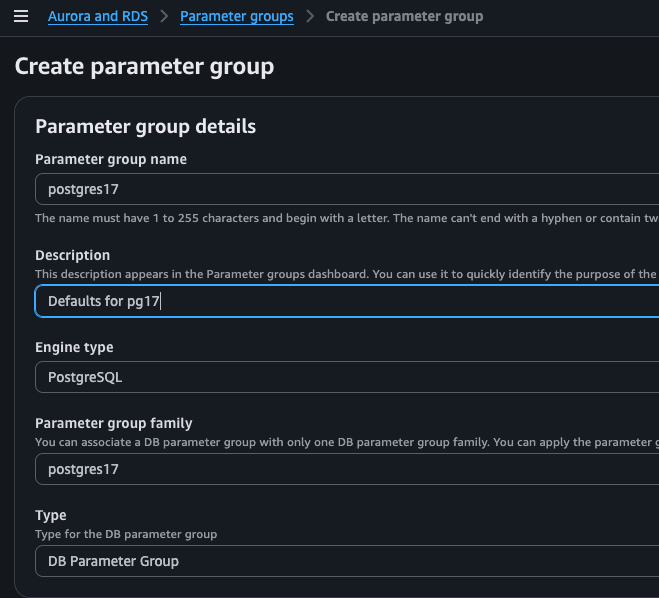

If you insist to do it using CLI, you have to modify the parameter group and set

rds.logical_replication = 1 on the blue database, so if you use default

parameter group, you have to first create a new custom one. Keep in mind that

this parameter requires reboot to be applied. Either way, you still need to

create a new parameter group for the green PostgreSQL database. You can leave

the defaults but the dialog doesn't create the default one for you.

Drifts in CloudFormation and Terraform

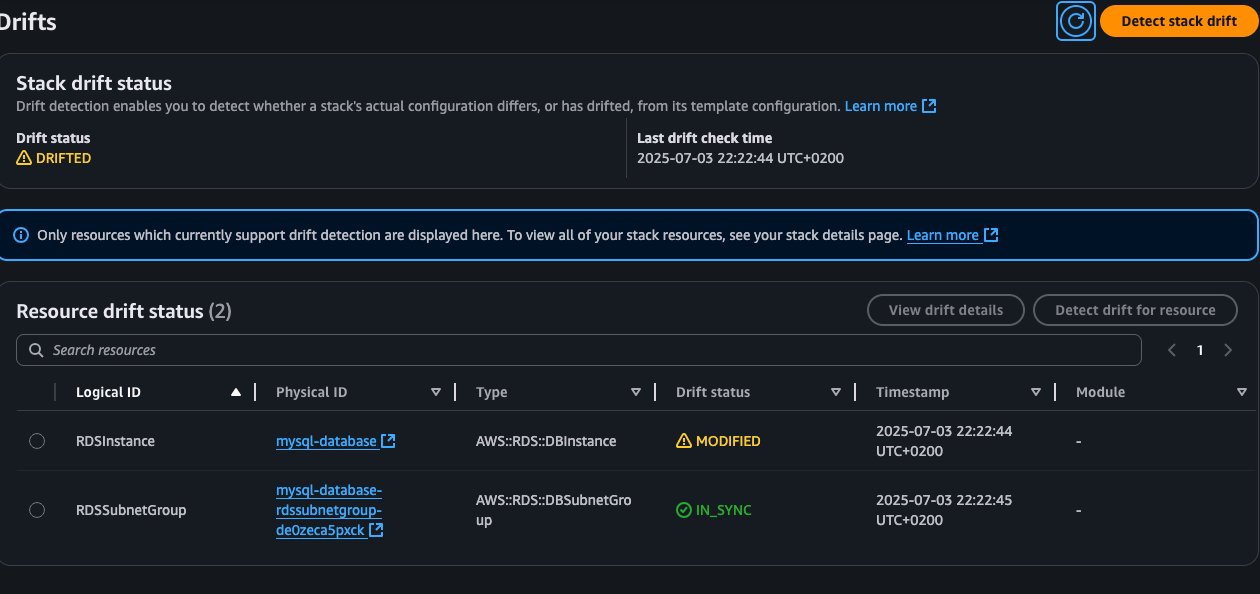

After you create the blue/green deployment, you can check if there are any drifts in IaC. So far nothing changed, the blue database is still the same even though it grouped is under the deployment. Same applies for Terraform.

Checking the replication

Once you have created the blue/green deployment, you can test if both databases

are in sync. Connect to the blue one using the CLI tools that are installed on

the experiment instance. In the monitoring application section we have created

a test_table that we can use to check. I will get the largest ID from the

table and then do the same for the green database - the resulting IDs should be

either equal or very close (it takes time to connect and execute the query). To

not be prompted for the password by psql, use PGPASSWORD environment

variable. You can reuse it for MySQL as well in the -p option.

$ read -s PGPASSWORD # Type the password on prompt

$ export PGPASSWORD

$ # Replace with your databases endpoints

$ psql -h postgresql-database.abcdefghi.eu-west-2.rds.amazonaws.com -U root -d postgresql_database -c "SELECT MAX(id) FROM test_table;" -w

max

-------

42215

(1 row)

$ psql -h postgresql-database-green-1234.abcdefghi.eu-west-2.rds.amazonaws.com -U root -d postgresql_database -c "SELECT MAX(id) FROM test_table;" -w

max

-------

42215

(1 row)

$ mysql -h mysql-database.abcdefghi.eu-west-2.rds.amazonaws.com -u root -D my_database -e "SELECT MAX(id) FROM test_table;" -p$PGPASSWORD

+---------+

| MAX(id) |

+---------+

| 1151 |

+---------+

$ mysql -h mysql-database-green-1234.abcdefghi.eu-west-2.rds.amazonaws.com -u root -D my_database -e "SELECT MAX(id) FROM test_table;" -p$PGPASSWORD

+---------+

| MAX(id) |

+---------+

| 1151 |

+---------+

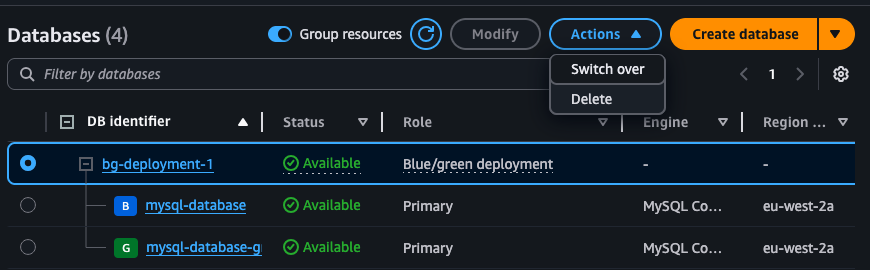

Performing switchover

Once the green database is ready and you tested using the new endpoint, you can perform a switchover. It is a simple operation that will swap the two databases. You can do it via the Console or CLI. With a brief downtime, you application will be able to use the new database without any changes (if it is compatible with the new version of the engine).

aws rds switchover-blue-green-deployment \

--blue-green-deployment-identifier bgd-abcdef123efghijk \

--region eu-west-2

Availability during blue/green creation

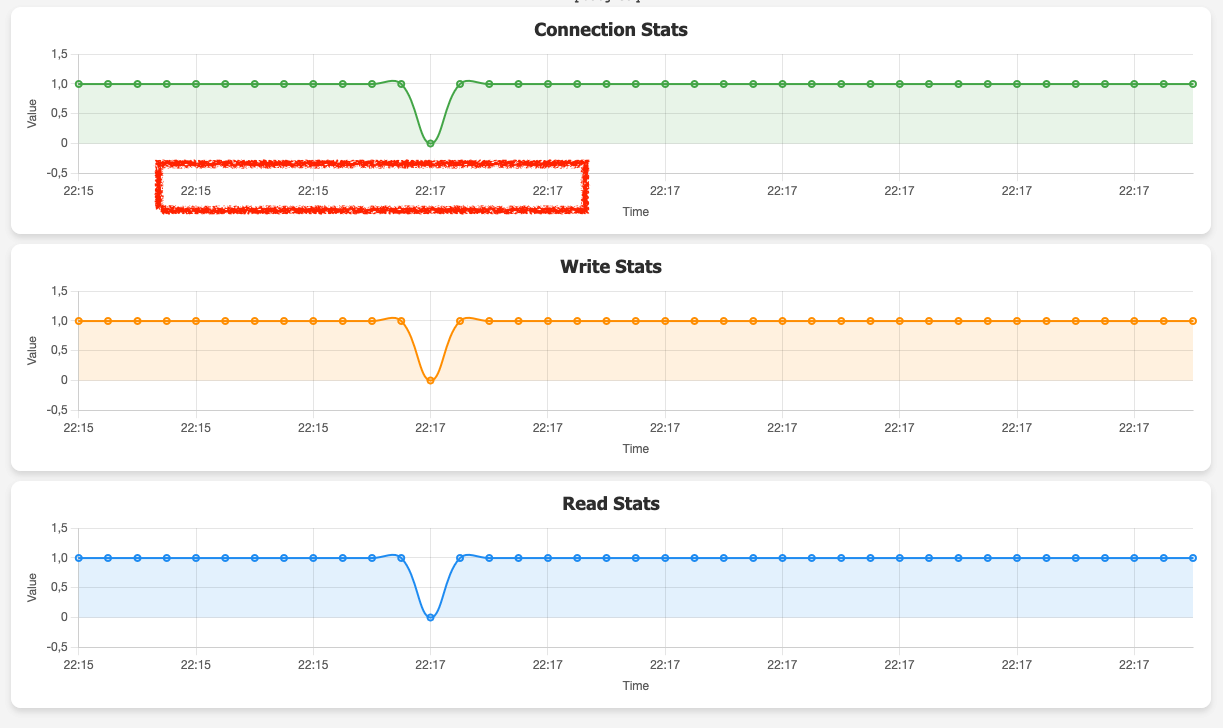

During the creation of the MySQL blue/green deployment, there's no downtime at all for the whole 20 minutes it took to finish the process. During switchover, one of the writes failed due to connection timing out, so either way you have to implement a retry and backoff mechanism in your code.

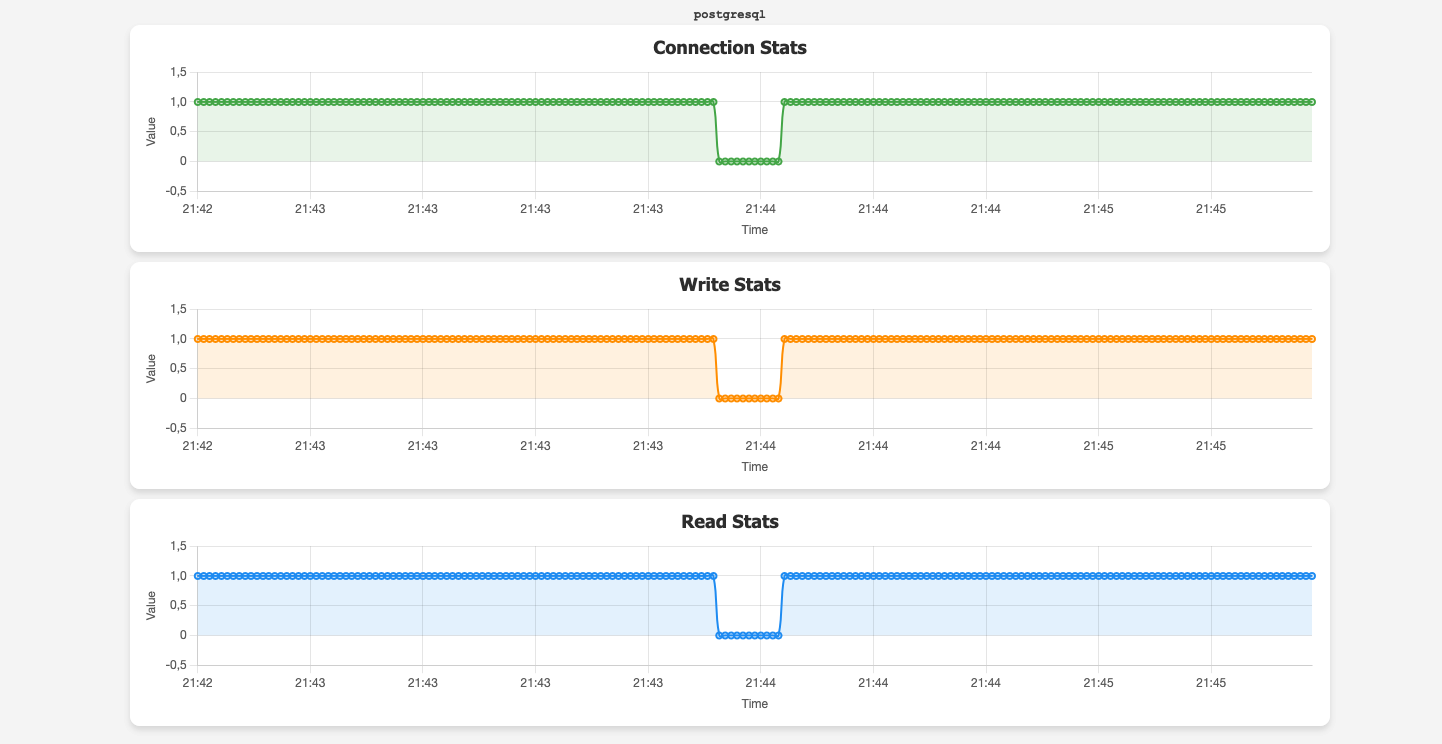

During the creation of PostgreSQL blue/green deployment, as mentioned earlier, you have to change the parameter group to enable logical replication. For my instance, it took around 10 seconds on reboot as you see on the graph below.

However, blue/green deployment itself did not cause any downtime, the database remained available for the application for the whole time. Switchover on the other hand caused the process to hang and the application lagged for less than two minutes!

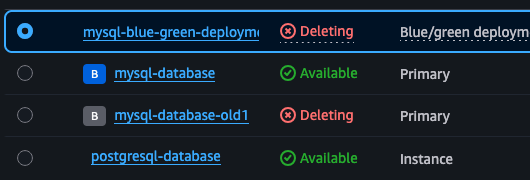

Cleaning up

You can clean up the resources you have created using the AWS Console or CLI. In the Console it looks like this: delete the old database (marked with gray B) as well as the deployment. Do not worry, deleting the deployment does not delete the nested databases in the UI 😆. The same way you can do it for PostgreSQL.

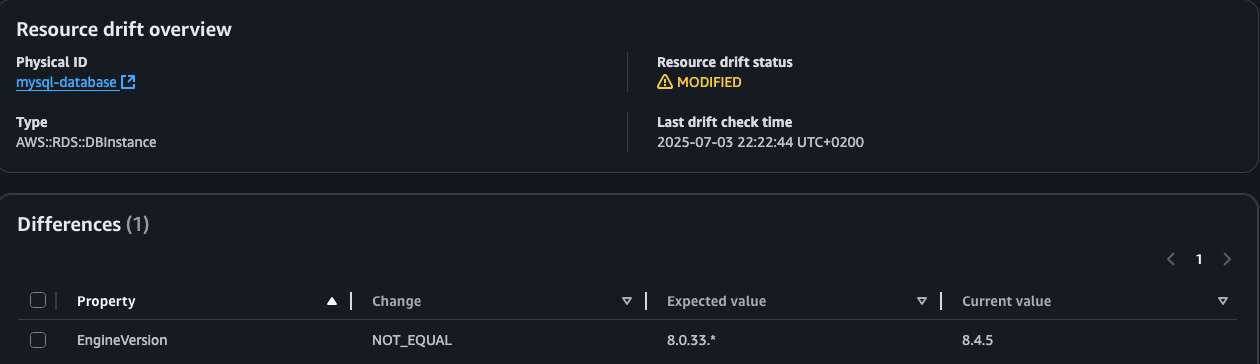

Checking the changes

When you detect drift in CloudFormation it will show the change. Just copy it over to the template and update the stack. Nothing will happen to the database (as long as this is the only change).

In Terraform you have to be more careful.

If you run tofu plan when the old database is still existing, it will show you

the following:

module.postgresql.aws_db_instance.postgresql: Refreshing state... [id=db-ABCDEFGHIJ1234567890ABCDEF]

...

# module.postgresql.aws_db_instance.postgresql will be updated in-place

~ resource "aws_db_instance" "postgresql" {

id = "db-ABCDEFGHIJ1234567890ABCDEF"

~ identifier = "postgresql-database-old1" -> "postgresql-database"

tags = {}

# (55 unchanged attributes hidden)

}

It means that the name of the old database will be changed to a new one.

However, once the deletion process is finished in AWS, it magically changes to

the new database with next tofu plan without doing anything to the state 🧐:

module.postgresql.aws_db_instance.postgresql: Refreshing state... [id=db-ABCDEFGHIJ1234567890ABCDEF]

...

# module.postgresql.aws_db_instance.postgresql will be updated in-place

~ resource "aws_db_instance" "postgresql" {

~ engine_version = "17.5" -> "13.21"

id = "db-ZXYW123456TUVWXYZ123456789"

tags = {}

# (56 unchanged attributes hidden)

}

The state still refers to the old database instance ID but it is somehow relayed to the new one. As soon as I change the engine version to the new one, it shows no changes, even without applying. On the other hand, when I made an unrelated change, the new ID for PostgreSQL was updated in the state without any prompts.

...

module.postgresql.aws_db_instance.postgresql: Refreshing state... [id=db-ZXYW123456TUVWXYZ123456789]

...

Conclusion

So it is clear now. Doing blue/green deployment is pretty easy, even when you use an IaC tool. The only caveat is that the new database is just a reader but in most cases it should be enough proof that the new version works. After all, you should have a stack with local database in the new version that you can perform integration tests on (for example Docker Compose).