Faster ECS startup - building SOCI Index in GitLab Pipeline

17 June 2025

Building SOCI indexes in a CI/CD pipeline where your control over the environment is restricted turned out to be pretty challenging. After a lot of trial and error, I eventually arrived at a solution that had looked very difficult at first. I will first give you a brief story of all the struggles, limitations and problems I faced. If you don't like stories, just skip to the Implementation part of this post.

⚠️ Note, June 2025: SOCI index usage is currently disabled in ECS by default. Back in 2024 it worked out of the box; now you need to reach out to AWS Support and ask them to enable it on the account. Whether that has to be the account where the ECS service resides or the one that holds the ECR repository is not yet clear to me. There's no flag indicating whether it is enabled; it's a hidden feature. The only test you can do is to start an ECS container with a SOCI index and look into the task metadata. See the Testing section for more info.

The beginnings

Back in December, my colleague Abhi reached out to me with an idea he saw in one of the AWS presentations: speeding up the ECS deployment process by using SOCI indexes on the images in ECR. We had a loose ticket to research it, so I thought it would be a good idea for a blog post. I looked around and wrote this post: Speeding up ECS containers with SOCI. But that solution ran on a local machine or an EC2 instance. We use Kubernetes to run our GitLab runners, so it could be treated as out of scope. I had no idea how to run soci-snapshotter with just a Docker-in-Docker service. During the research, I found out that AWS distributes this Lambda based tool that indexes all the images in ECR when EventBridge emits a push event. This tool would have simplified everything and wouldn't have involved GitLab at all.

However, there were two problems with this solution:

- This tool was new and nobody knew anything about it: whether it has any side effects or whether anything else could go wrong. We could potentially limit the scope of this tool by modifying the EventBridge rule to only include one sample repository, but...

- we are not the team that is responsible for infra where all ECR repositories are created, so another team would need to maintain this solution.

What was left for me was to create a proof of concept with what I had: GitLab and Docker-in-Docker.

The first attempt

SOCI Snapshotter requires the containerd runtime. Docker is not containerd, and I didn't find any official image for plain containerd. But I remembered that at some point Docker started using containerd as its default runtime, so for some years now it must have been somewhere in there. I fired up the image and looked around.

    $ docker run --rm -it docker:dind /bin/sh
    $ ls -l /usr/local/bin
    total 200360
    -rwxr-xr-x 1 root root 39256216 Apr 18 09:53 containerd
    -rwxr-xr-x 1 root root 12976280 Apr 18 09:53 containerd-shim-runc-v2
    -rwxr-xr-x 1 root root 19792024 Apr 18 09:53 ctr

It is there! If I modify the command for the GitLab service, I can run just containerd without Docker. Having added the script for soci-snapshotter and specified the new service container with a port [1], I should be able to at least list indexes, even though I expect the list to be empty.

    ...
    index-image:
      stage: build
      needs: [build-image]
      image: docker:28
      services:
        - name: docker:28-dind
          command:
            - 'containerd'
            - '--address'
            - '0.0.0.0:8888'
      before_script:
        - wget -O /tmp/soci.tar.gz https://github.com/awslabs/soci-snapshotter/releases/download/v0.9.0/soci-snapshotter-0.9.0-linux-amd64-static.tar.gz
        - tar -C /usr/local/bin/ -xf /tmp/soci.tar.gz
        - mkdir -p /var/lib/soci-snapshotter-grpc
      script:
        - soci -a docker:8888 index list
        - soci -a tcp://docker:8888 index list
    ...

    $ soci -a docker:8888 index list
    soci: failed to dial "docker:8888": failed to build resolver: invalid (non-empty) authority: docker:8888
    $ soci -a tcp://docker:8888 index list
    soci: failed to dial "tcp://docker:8888": failed to build resolver: invalid (non-empty) authority: tcp:

Well, even though you can start containerd on a TCP port, most tools expect the address to be a Unix socket. For more information, you can see the issue on GitHub and the latest commit for the ctr tool, which still uses only sockets.
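The exact wording of the two errors becomes easier to read under one assumption, which I have not confirmed in the soci source: the client prepends unix:// to whatever address it receives, and the result is then split like a URL. The Python sketch below only mimics that splitting with urllib.parse. The helper name dial_target is hypothetical, and this is not the actual gRPC resolver code.

```python
from urllib.parse import urlsplit


def dial_target(address: str) -> tuple[str, str]:
    """Return (authority, path) of the target a socket-only client would dial.

    Assumption: the client prepends "unix://" to the user-supplied address.
    """
    parts = urlsplit("unix://" + address)
    return parts.netloc, parts.path


for address in ("docker:8888", "tcp://docker:8888", "/builds/containerd.sock"):
    authority, path = dial_target(address)
    # A Unix socket target has no host part, so any authority is an error.
    verdict = "ok" if authority == "" else "rejected: non-empty authority"
    print(f"{address!r}: authority={authority!r} path={path!r} -> {verdict}")
```

Under that assumption, the authority parts come out as docker:8888 and tcp:, which are the same strings that appear in the error messages, while a plain filesystem path leaves the authority empty.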

Passing the sockets

Since we are out of luck with TCP connections, let's try to pass the socket between the containers. In order to share data between the containers, I need a volume. In order to have a volume, I have to reconfigure the GitLab runners. For that I would need to reach out to the other team and beg them to do it, and that, as usual, would be a process that can "break something". BUT! Luckily, there's one volume that GitLab ALWAYS mounts into all the containers: /builds. This is the directory where all the code is cloned. I can simply put the socket there!

    ...
    index-image:
      stage: build
      needs: [build-image]
      image: docker:28
      services:
        - name: docker:28-dind
          command:
            - 'containerd'
            - '--address'
            - '/builds/containerd.sock'
      before_script:
        - wget -O /tmp/soci.tar.gz https://github.com/awslabs/soci-snapshotter/releases/download/v0.9.0/soci-snapshotter-0.9.0-linux-amd64-static.tar.gz
        - tar -C /usr/local/bin/ -xf /tmp/soci.tar.gz
        - mkdir -p /var/lib/soci-snapshotter-grpc
      script:
        - soci -a /builds/containerd.sock index list

    $ soci -a /builds/containerd.sock index list
    DIGEST SIZE IMAGE REF PLATFORM MEDIA TYPE CREATED

It worked 🥳! Let's use ctr to pull the image I built in the previous pipeline job and create an index for it. This should be straightforward.

    $ ctr -a /builds/containerd.sock image pull ${ECR_REPOSITORY}:${IMAGE_TAG}
    ...
    unpacking linux/amd64 sha256:193c73fd0042600355faec57992cc42e9dbfff992c6455cccec34a9ccbf87736...
    time="2025-04-28T16:57:01Z" level=info msg="apply failure, attempting cleanup" error="failed to extract layer
    sha256:0c41e94dbf3f73146363caf58d9b3ca35d137b751d64035c3e33d4092fe878b1: failed to convert whiteout file
    \"etc/nginx/conf.d/.wh.default.conf\": operation not permitted: unknown" key="extract-952335201-Ui-y
    sha256:babcfd9a38486c729abdece6dea338302ad8f7b4e2a58f65b9cb9a5430fb907c"
    ctr: failed to extract layer sha256:0c41e94dbf3f73146363caf58d9b3ca35d137b751d64035c3e33d4092fe878b1: failed
    to convert whiteout file "etc/nginx/conf.d/.wh.default.conf": operation not permitted: unknown

What just happened 🤯?! It turns out our images are built on the legacy AUFS. Neither setting --feature containerd-snapshotter on the Docker daemon nor installing fuse-overlayfs before starting containerd changed the behavior. Setting up rootless mode would also take a lot of time and effort, as it uses systemd (unless I dug long enough into that as well 😣). [3] However...

What does this Lambda do?

At the beginning of this story, I gave you the link to this Lambda based tool. I needed to know whether and how it starts the containerd daemon. So I followed the trail and found the actual function, written in Go. It is a simple function: no daemons, just plain Go code without too many goroutines. So I converted this function into a standalone binary that can be run inside my GitLab container. I will describe it in a moment in the Implementation section, but first let's do some more theory.

Meet ORAS

What even is a container registry? What magic sits behind it that lets you push layers, manifests and assorted JSON files? If you know Artifactory or JFrog, you are already heading in the right direction.

A container registry, whether it is ECR, Docker Hub or Quay, is nothing more than an HTTP server for uploading and downloading files. Really, just PUT, POST and GET (and maybe DELETE). And this server holds files: layers and JSONs. Layers are just tar archives that contain the container's files. JSONs describe the content, hashes and other metadata such as the CMD and ENTRYPOINT of the image; they can also be multi-arch manifests or SOCI indexes. (It works very similarly to package managers such as npm or apt.) In order to download an image, you first need to fetch a JSON manifest file that contains the list of tar files to download. You can obviously do it with a script, but there's a tool for that.
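To make the "manifest first, then tar files" flow concrete, here is a minimal Python sketch of what such a script would compute. The manifest is a hypothetical, heavily trimmed example with made-up digests and sizes, and registry.example.com and test-image are placeholder names. The URL layout follows the OCI distribution API (/v2/<name>/manifests/<reference> and /v2/<name>/blobs/<digest>). The sketch only builds the URLs; a real registry such as ECR would also require an authorization header on every request.

```python
import json

# Hypothetical, heavily trimmed OCI image manifest; digests and sizes are made up.
SAMPLE_MANIFEST = """
{
  "schemaVersion": 2,
  "mediaType": "application/vnd.oci.image.manifest.v1+json",
  "config": {
    "mediaType": "application/vnd.oci.image.config.v1+json",
    "digest": "sha256:aaaa",
    "size": 1470
  },
  "layers": [
    {"mediaType": "application/vnd.oci.image.layer.v1.tar+gzip",
     "digest": "sha256:bbbb", "size": 31400000},
    {"mediaType": "application/vnd.oci.image.layer.v1.tar+gzip",
     "digest": "sha256:cccc", "size": 2100}
  ]
}
"""


def manifest_url(registry: str, repository: str, reference: str) -> str:
    """URL to GET the manifest for a tag or digest."""
    return f"https://{registry}/v2/{repository}/manifests/{reference}"


def blob_urls(registry: str, repository: str, manifest: dict) -> list[str]:
    """URLs to GET the config JSON and every layer listed in the manifest."""
    descriptors = [manifest["config"], *manifest["layers"]]
    return [f"https://{registry}/v2/{repository}/blobs/{d['digest']}" for d in descriptors]


manifest = json.loads(SAMPLE_MANIFEST)
print(manifest_url("registry.example.com", "test-image", "latest"))
for url in blob_urls("registry.example.com", "test-image", manifest):
    print(url)
```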

ORAS is a tool that allows you to push and pull OCI artifacts in container registries. It comes in the form of a CLI tool and multiple libraries. This is what the Lambda function used to "pull", or rather download, each layer (tar file) and index it (create a list of files with byte offsets). SOCI does not need to convert the image; it operates purely on the layers and the files within them. This greatly simplifies the workflow.
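The "list of files with byte offsets" idea can be sketched in a few lines of Python using the standard tarfile module. This is a simplified illustration, not the SOCI index format: it works on a tiny uncompressed tar built in memory, whereas real layers are usually compressed and the real index has to account for that. The file names and contents are made up.

```python
import io
import tarfile


def build_sample_layer() -> bytes:
    """Create a tiny uncompressed tar archive in memory (stand-in for a layer)."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w", format=tarfile.USTAR_FORMAT) as tar:
        for name, content in (
            ("etc/nginx/nginx.conf", b"user nginx;\n"),
            ("usr/share/nginx/html/index.html", b"<h1>hello</h1>\n"),
        ):
            info = tarfile.TarInfo(name=name)
            info.size = len(content)
            tar.addfile(info, io.BytesIO(content))
    return buffer.getvalue()


def index_layer(layer: bytes) -> list[tuple[str, int, int]]:
    """Return (path, data offset, size) for every regular file in the tar."""
    with tarfile.open(fileobj=io.BytesIO(layer), mode="r:") as tar:
        return [(m.name, m.offset_data, m.size) for m in tar if m.isfile()]


def read_file(layer: bytes, offset: int, size: int) -> bytes:
    """Fetch one file by its offset, without unpacking the rest of the archive."""
    return layer[offset:offset + size]


layer = build_sample_layer()
index = index_layer(layer)
for path, offset, size in index:
    print(path, offset, size, read_file(layer, offset, size))
```

With such an index in hand, a reader can jump straight to the bytes of a single file, which is the property that lets a container start before the whole layer has been downloaded.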

Implementation

I've created a new repository with a fork of cfn-ecr-aws-soci-index-builder. You can find it here: ecr-aws-soci-index-builder. You can see the diff showing how I converted the Lambda function to a standalone application here: ecr-aws-soci-index-builder/compare/0de73cb..0d8f4c1. Eventually, I removed all traces of Lambda, moved the function files to upper directories and deleted unnecessary files. What is more, I upgraded the dependencies to newer versions [2]. The repository I linked should be built with Docker and pushed to your registry, whether on GitLab or ECR, as long as your GitLab Runner has access to pull it.

Now, with the new tool, I can simply run it in the GitLab pipeline as a container by just changing the entrypoint and calling soci-index-build when necessary. Now comes the time for testing! I used the same image as in the previous post: Speeding up ECS containers with SOCI. I built this image using only Docker, pushed it to the registry and measured startup time multiple times. Then I created the SOCI index for it and measured again. It simplifies the setup because we don't need to run any sidecars.

Assuming that you build your Docker image in a job named build-image, a job like this should follow it:

    index-image:
      variables: # Region is needed if it's unset globally
        AWS_REGION: eu-west-3
        AWS_DEFAULT_REGION: eu-west-3
      stage: build
      needs: [build-image]
      image:
        name: ${SOCI_INDEX_BUILD_IMAGE}
        entrypoint: ["/bin/sh", "-c"]
      script:
        - soci-index-build -repository ${ECR_REPOSITORY}:${IMAGE_TAG}

As long as you have valid AWS credentials available to the job (you can use CI/CD variables, though I highly suggest OIDC authentication), this should automatically pull the image, create the index and push it to the same ECR repo.

🧪 Testing

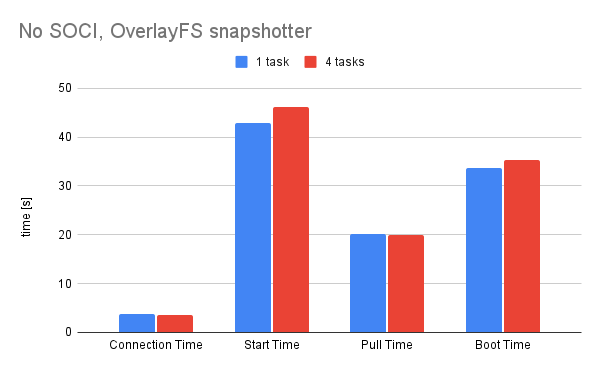

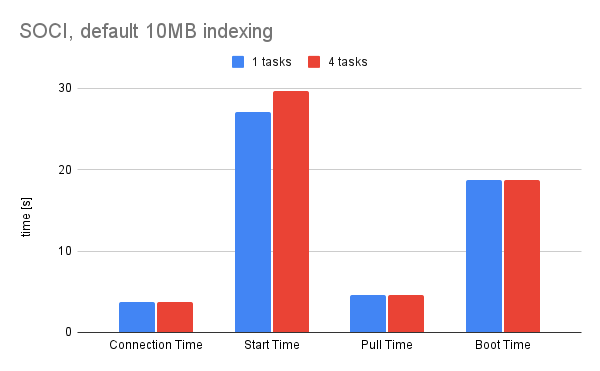

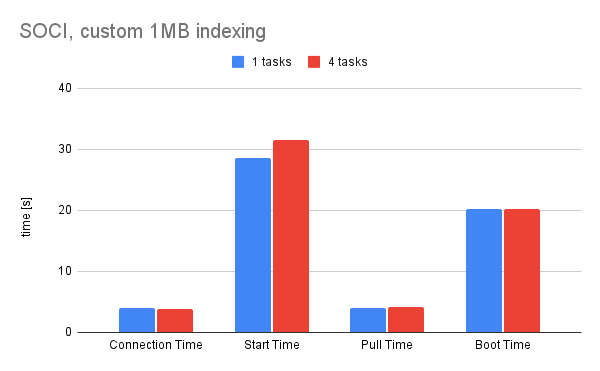

So, I created a pipeline that first built the image without a SOCI index. I performed some startup tests on it with 1 task and with 4 tasks at once and took averages. Then I generated an index with the default settings, where only layers larger than 10 MB are indexed, and then lowered the threshold to 1 MB using the -min-layer-size flag. Below I show the average timings for the plain image without the index.

When there's no index for the image, the pull takes around 20 seconds and the start around 45 seconds for this particular image. I generated the index in the pipeline using the newly implemented tool and verified that the service was running the correct image before taking measurements. In one of the startup scripts I added the following lines:

    echo -n "return 200 \"" > /etc/nginx/status.conf
    curl -X GET ${ECS_CONTAINER_METADATA_URI_V4}/task | sed 's/"/\\"/g' >> /etc/nginx/status.conf
    echo -n "\";" >> /etc/nginx/status.conf

The file status.conf was loaded by Nginx when the /status location was requested. I used curl to get the value of Snapshotter in the JSON returned by this URL. If it returns "soci", the index was used. If it returns "overlayfs", either you don't have a correct index for that image or you need to reach out to AWS Support to enable it for your account.

    $ curl http://test-image-lb-1234567890.eu-west-1.elb.amazonaws.com/status \
        | jq ".Containers[0].Snapshotter"
    "soci"

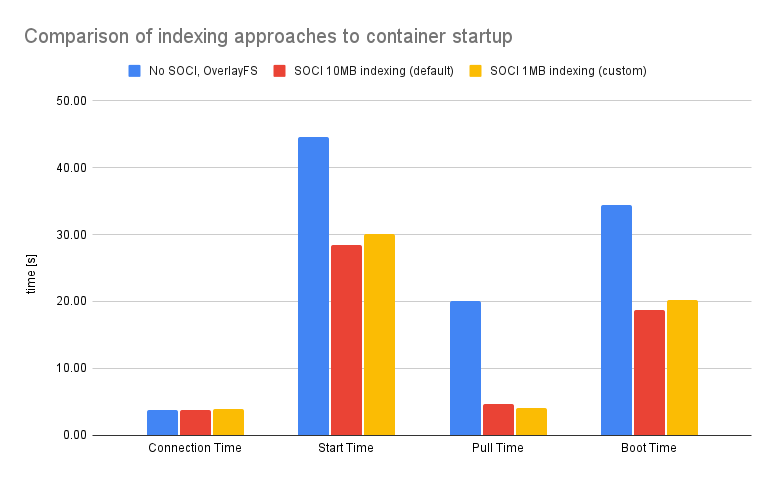
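If jq is not at hand, the same check is easy to script. The Python sketch below parses a hypothetical, trimmed task metadata document and reports the snapshotter per container. Only the Containers and Snapshotter fields come from the check above; the sample values and the helper names snapshotters and index_was_used are made up for illustration.

```python
import json

# Hypothetical, trimmed task metadata response; real responses carry many more fields.
SAMPLE_TASK_METADATA = """
{
  "Cluster": "test-cluster",
  "Containers": [
    {"Name": "test-image", "Snapshotter": "soci"}
  ]
}
"""


def snapshotters(task_metadata: str) -> dict[str, str]:
    """Map container name to the snapshotter reported for it.

    Containers without a Snapshotter field are reported as "unknown".
    """
    task = json.loads(task_metadata)
    return {c["Name"]: c.get("Snapshotter", "unknown") for c in task.get("Containers", [])}


def index_was_used(task_metadata: str) -> bool:
    """True only if the task has containers and all of them report "soci"."""
    found = snapshotters(task_metadata)
    return bool(found) and all(value == "soci" for value in found.values())


print(snapshotters(SAMPLE_TASK_METADATA))
print(index_was_used(SAMPLE_TASK_METADATA))
```

Checking every container instead of only the first one is a design choice of this sketch, useful when a task runs more than one container.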

With that verified, I could continue with the measurements. I ran two pipelines as mentioned: one for the default 10 MB layer size and one for the custom 1 MB layer size. The timings are very similar to each other, so it is debatable whether tweaking this value is worth the effort.
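One possible reason the two thresholds behave alike is that most of an image's bytes tend to sit in a few large layers. The Python sketch below illustrates this with hypothetical layer sizes, not the ones from the measured image. It also assumes the threshold is inclusive and expressed in bytes, which I have not verified against the tool.

```python
MIB = 1024 * 1024

# Hypothetical layer sizes in bytes; not taken from the measured image.
SAMPLE_LAYERS = {
    "sha256:base": 48 * MIB,
    "sha256:packages": 120 * MIB,
    "sha256:app": 6 * MIB,
    "sha256:config": 4 * 1024,
}


def layers_to_index(layers: dict[str, int], min_layer_size: int) -> list[str]:
    """Return digests of layers at or above the size threshold (assumed inclusive)."""
    return [digest for digest, size in layers.items() if size >= min_layer_size]


def indexed_share(layers: dict[str, int], min_layer_size: int) -> float:
    """Fraction of the image's bytes that sit in indexed layers."""
    selected = layers_to_index(layers, min_layer_size)
    return sum(layers[d] for d in selected) / sum(layers.values())


for threshold in (10 * MIB, 1 * MIB):
    print(
        f"threshold {threshold // MIB} MiB:",
        layers_to_index(SAMPLE_LAYERS, threshold),
        f"share={indexed_share(SAMPLE_LAYERS, threshold):.3f}",
    )
```

In this made-up example, lowering the threshold adds one more layer to the index but raises the share of indexed bytes only slightly.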

Both of the solutions reach a 30 second start time and a sub 5 second pull time, which either way is a huge improvement over the OverlayFS snapshotter (the start time alone drops by roughly a third, from around 45 seconds). That being said, let's visually compare both of the options to see the difference. I took averages of both timings for each task count.

The difference is pretty drastic. With this particular image the benefits would be huge. However, that won't be the case for every setup. If your image is built from scratch and contains just a single layer with a very minimal set of files, you are unlikely to benefit much from the seekable image.

1. I also tried connecting to the address at docker:2376 but learned that this port is for the REST API of Docker and not gRPC like containerd 😅.

2. Unfortunately, I had to leave soci-snapshotter at the latest compatible version, 0.6.1, because 0.7.0 introduced a breaking change where the WriteSociIndex function was made private and refactored in soci_index.go.

3. Yes, I could have used Kaniko, but then I would need to onboard each team to it, and we want to avoid as much friction on the developers' side as we can when doing this. This is one of the principles of DevOps.